Chapter 3 Modeling

I have two separate aspects to modeling: forecasting the point spread throughout the week and predicting the score of the game. For both processes, I tried different approaches to modeling and chose the best performing model based on performance on test datasets.

3.1 Point Spread Forecasting Model

I forecasted the point spread throughout the week by treating this object as a time-series. I explored the data with an aim to find the best approach to modeling, before then moving into the modeling procedure. The best performing model was a time-varying Bayesian Dynamic Linear Regression model that used ARIMA (Autoregressive Integrated Moving Average) methods to forecast the time-varying parameters that are used to forecast the point spread in the Dynamic Linear Regression Model. In addition, for utilizing the model, I needed to determine how many data points will be in the series. I used a mixed linear regression model for this purpose.

3.1.1 Exploratory Data Analysis

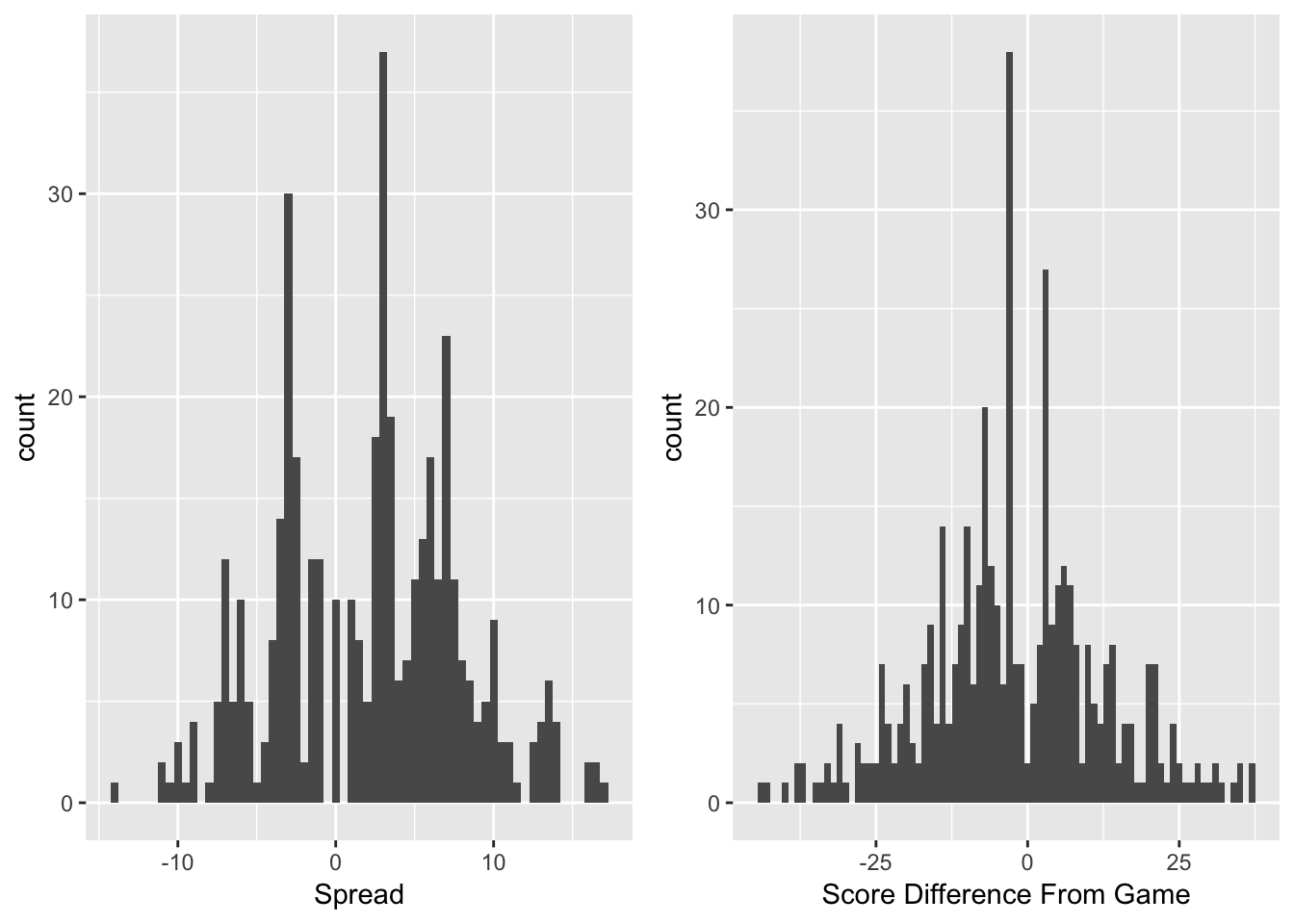

Figure 3.1: Histograms of Point Spreads and Score Differences from Games

The first aspect to examine when forecasting the spreads is the distribution of spreads. It is also important to look at the distribution of game outcomes that these spreads model. Figure 3.1 shows both of these distributions. Both have multiple peaks. These multiple peaks arise because in football, nearly all scores are worth \(3\) or \(7\) points. When predicting the difference between two teams, many games will end up with a forecasted spread near these key numbers, and the results of these games will fall at these numbers often. In addition, there are a few dead zones – mainly in between \(0\) and \(3\). The results of the games mirror the distribution of the forecast spreads, however, with a much wider distribution. It is difficult to forecast a blowout game, but they do occur, which is why there are much longer tails for the true score differences.

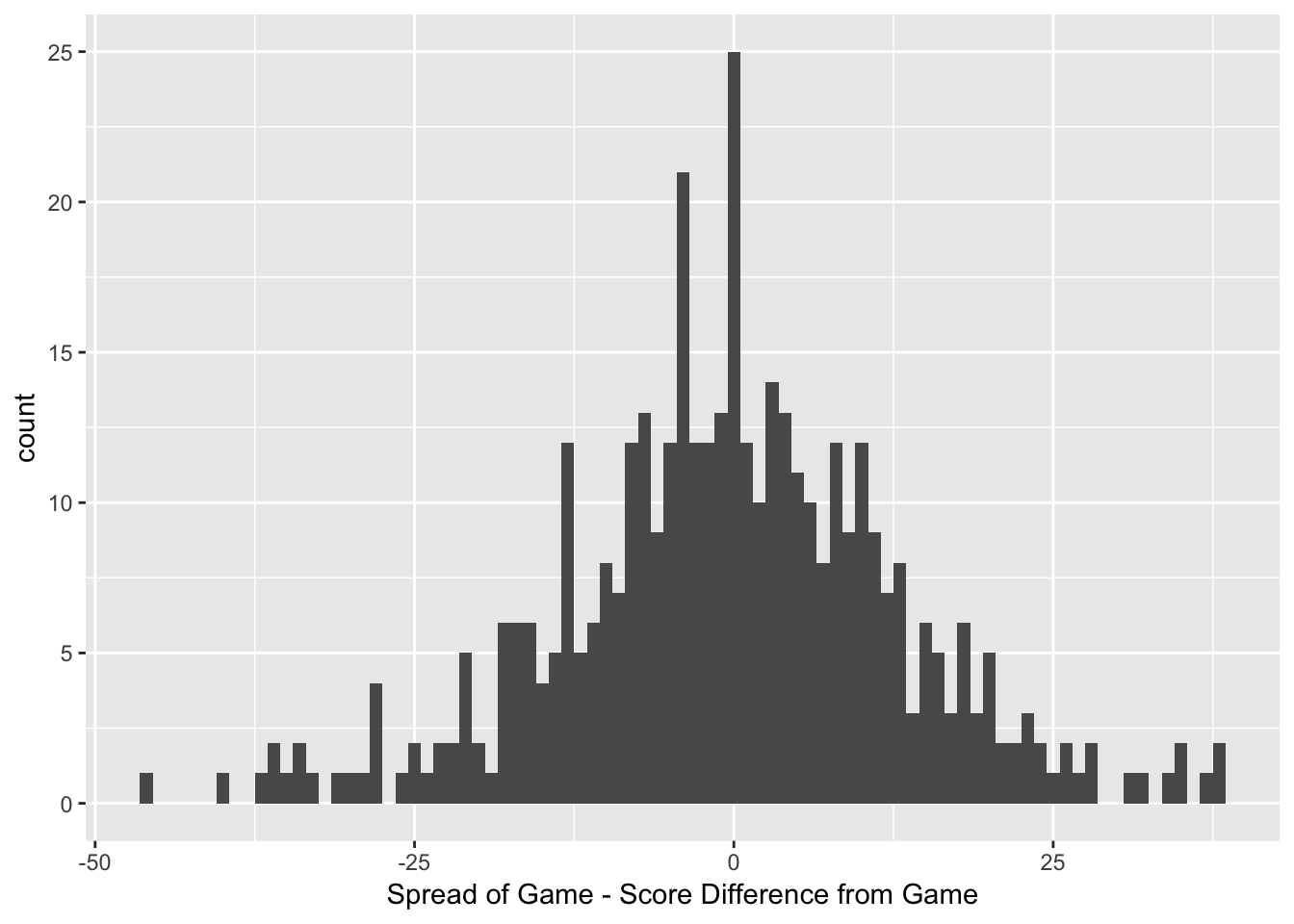

Figure 3.2: Histogram of Game Results against Spread

Figure 3.2 shows a distribution of the result of the game against the spread. A result of \(0\) would indicate that the game ended with the same result as the spread, and the result of the game would be a push, meaning that nobody wins and the bettor’s stake is returned to the better. To demonstrate the accuracy of the bookmaker’s, it is evident that the distribution is relatively normally distributed around \(0\), with a second peak at \(-3\) indicating that many of the games resulted in the home team beating the spread by \(3\) points.

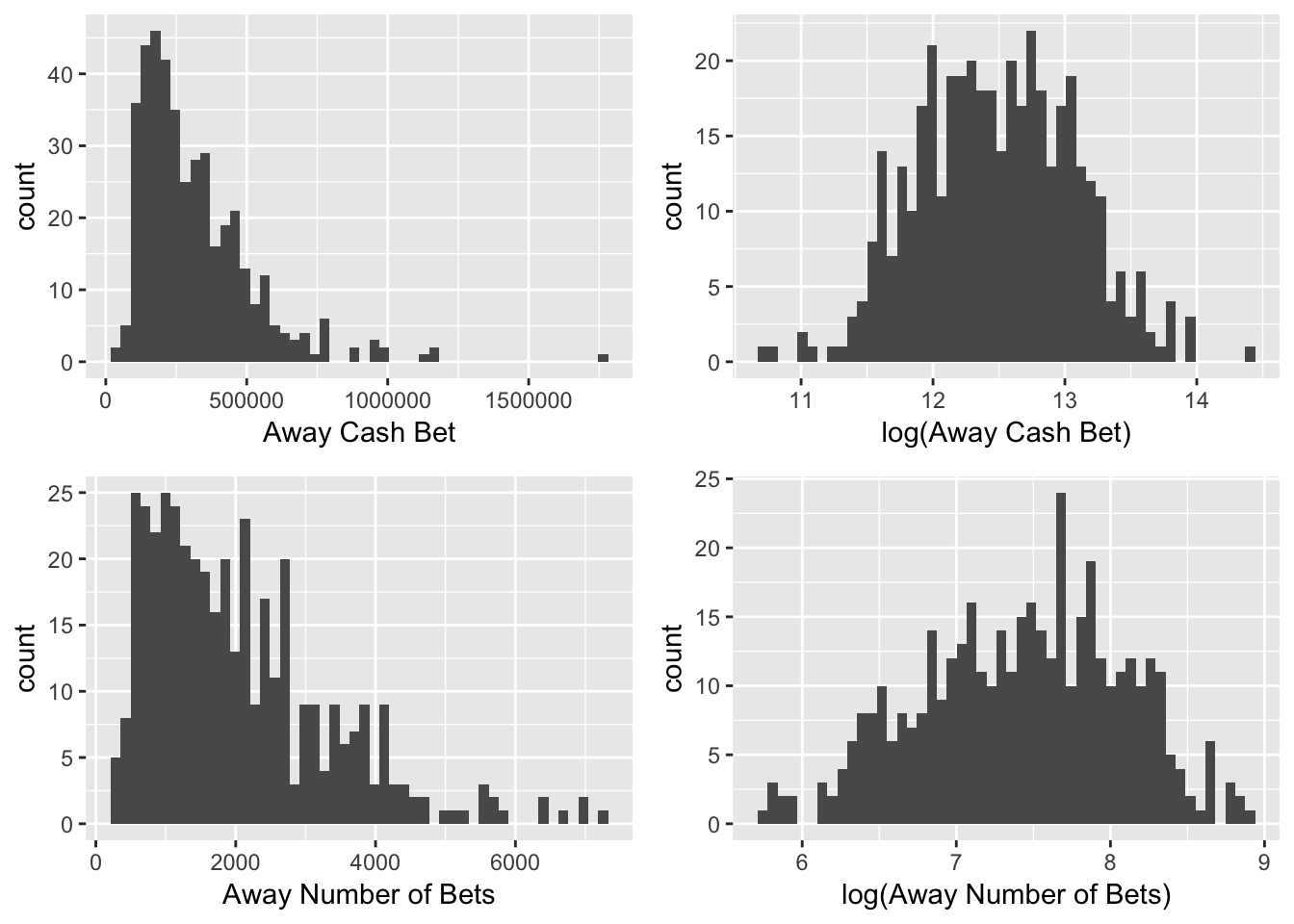

Figure 3.3: Transformations of Key Betting-Statistic Variables

Research suggests that casinos adjust the line based on the amount of cash bet on each side, so that they can even out the amount of money bet on each time and guarantee themselves a return. Examining the cash variables can help evaluate this research. Figure 3.3 demonstrates the skewness of the cash and ticket number variables, as well as updated distributions after transformations. The cash variable is very right-skewed. For modeling and interpretability, it is integral to transform this variable into the log of the cash bet. The number of bets on each side is also right skewed. The \(\log(\text{Away Cash Bet})\) and \(\log(\text{Away Number of Bets})\) are both significantly closer to normally distributed.

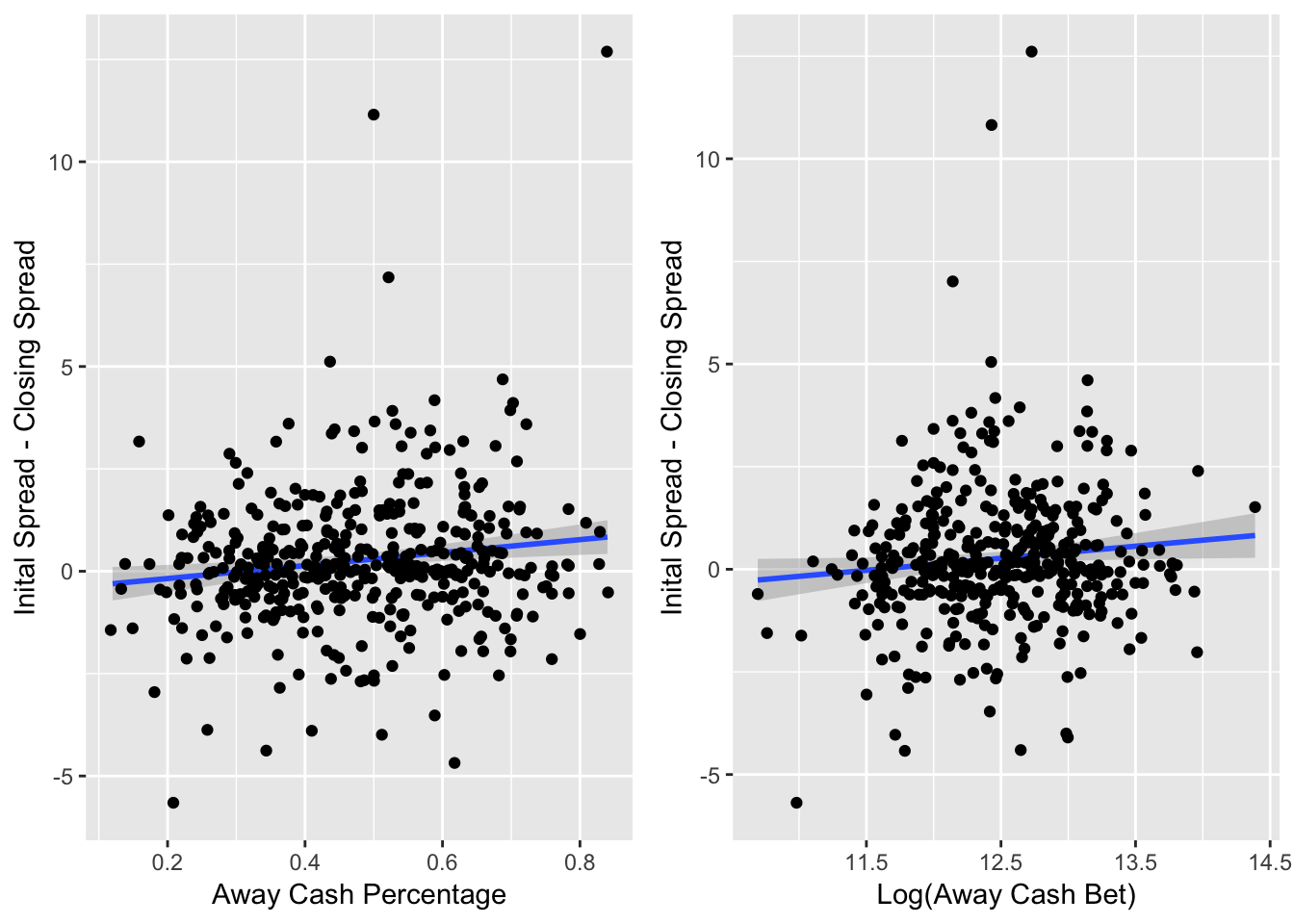

Figure 3.4: Line Difference versus Key Variables

Figure 3.4 shows the the line difference from when the casino first listed the spread to the spread when the game started compared with the cash percentage and the \(\log(\text{Away Cash Bet})\). Research suggests that with more cash bet on the away team, the casino would want to make the spread less favorable for the away team, in an effort to get more money placed on the home team and achieve a 50/50 split.

Here, while the effect is not major, for both the away cash percentage and away cash amount variables, as they increase, the line difference for the away tends to become more negative. This means that when more cash is on the away team, the spread tends to become more favorable to the home team. For example, a line difference of -2 means that the initial spread could have been the away team is favored by 6 points (-6), but then the spread moved to make the away team favored by 8 points (-8). The away team must now win by more than 8 points to cover the spread, opposed to the previous point where the away team only needed to win by more than 6 points.

There are a few outliers where the line difference is greater than 5 points. This sort of extreme movement only can occur due to big player news. For example, if there is news on the Friday leading up to the game that Tom Brady is injured and cannot play, this would cause a massive swing in the line that would not be related to the cash and ticket percentages.

3.1.2 Modeling the Point Spread

After exploratory data analysis, the next step is finding the best model to forecast the future spread. The model needs to forecast what the spread will be from a certain decision point. This first decision point is the first point when bets will be placed. The chosen decision point is after two-thirds of the observations in each time-series. The data frame containing the observations for each game is cut off at the two-thirds mark, and the model then forecasts the point spread for the final one-third of observations, using only the information up to this two-thirds point. I consider a Bayesian and frequentist approach to modeling the point spread. After forecasting the point spread for the final one-third observations, I calculate the error for each model by finding the difference between the forecasted point spread and true point spread for each observation. I use the forecasts from the model with the lowest average error across all my time-series’ in my betting strategies.

The Bayesian approach to modeling is a time-series random walk plus noise regression model. The process starts by placing a prior for the parameters in my model before updating these parameters with the posterior mean through finding the MLE of the parameters of this regression model. The regressors in the model are the \(\log(\text{Away Cash Bet})\), Away Number of Bets (Away Ticket Number), \(\log(\text{Home Cash Bet})\) and Home Number of Bets (Home Ticket Number).

The full process of creating the dynamic linear model is demonstrated through the example of the Week 2, 2018 game between the Minnesota Vikings and the Green Bay Packers.

Equations and express a dynamic linear regression model with time-varying parameters.

\[\begin{eqnarray} y_{t} =& \textbf{X}_{t}^{'} \ \theta_{t} + v_{t} \hspace{.5cm} v_{t} \sim& N(0, V_{t}) \text{; (observation equation)} \label{eqn:basic_dlm} \\ \theta_{t} =& G_{t} \ \theta_{t-1} + \omega_{t} \hspace{.5cm} \omega_{t} \sim& N(0, W_{t}) \text{; (evolution equation).} \label{eqn:basic_dlm2} \end{eqnarray}\]The vector of observations up to time \(t\) is \(y^{t} = (y_1, ..., y_t)\). The observation equation (equation 5) describes the vector of observations \(y_{t}\) (the spread at time \(t\)) through its State vector \(\theta_{t}\) (the predictor variables at time \(t\)) and the vector of noise from the observations \(v_t\). The evolution equation (equation 6) describes the evolution of the state vector over time with a Markov structure. \(\theta_{t}\) is the state vector of the time-varying regression parameters (of number \(p\)); \(\theta_{t} = (\alpha_{t} \ ; \beta_{t})^{'}\) with dimension \(p \times 1\). \(\alpha_{t}\) and \(\beta_{t}\) are the regression coefficients \(\textbf{X}_{t}^{'}\) is the row vector of covariates at time t of dimension \(1 \times p\). \(w_t\) is the variance of the state-space vectors. \(G_{t}\) is an evolution matrix of \(p \times p\) dimension. This is the evolution matrix because it allows for the evolution of the state space vector by matching up the dimensions the parameters. \(G_{t}\) is typically, and in this model, an identity matrix.

This is the general setup for a dynamic linear regression model. Equations — show the expansion of equation .

\[\begin{eqnarray} y_{t} = \alpha_{t} + \beta_{t} \ \textbf{X}^{'}_{t} + v_{t} \hspace{1cm} v_{t} &\sim& N(0, V_t) \label{eqn:gen_dlm1} \\ \alpha_{t} = \alpha_{t-1} + \epsilon_{t}^{\alpha} \hspace{1cm} \epsilon_{t}^{\alpha} &\sim& N(0, \sigma^{2}_{\alpha}) \label{eqn:gen_dlm2} \\ \beta_{t} = \beta_{t-1} + \epsilon_{t}^{\beta} \hspace{1cm} \epsilon_{t}^{\beta} &\sim& N(0, \sigma^{2}_{\beta}) \label{eqn:gen_dlm3} \end{eqnarray}\]There are three parameters that need to be set, and that is the variance of the observations \(V_t\), and then the variances of the regression coefficients for the state-space vector – \(\sigma^{2}_{\alpha}\) and \(\sigma^{2}_{\beta}\).

This can be done through a Bayesian method, where the initial parameter start values are set, and then through finding the MLE of the DLM using the , these parameters are updated with the posterior mean. I used sample observational variance of the spread up to the first decision point as the starting value of the observational variance \(V\). I used a flat prior for the variances of the regression parameters have a flat prior. Table 3.1 shows the values for the prior and posterior means of the variance parameters.

| Prior Parameters | Posterior Parameters | |

|---|---|---|

| \(V\) | 0.5261619 | 0.0040991 |

| \(\sigma^{2}_{\alpha}\) | 0.0000000 | 0.0996949 |

| \(\sigma^{2}_{\beta}\) | 0.0000000 | 0.0000000 |

The posterior mean for the \(\sigma^{2}_{\alpha}\) and \(\sigma^{2}_{\beta}\) values are used diagonally in the \(\omega_t\) matrix. Looking back at equations and , \(\theta_t\) for each observation is found through using \(\alpha_t\) and \(\beta_t\) values, which are drawn through \(\sigma^{2}_{\alpha}\) and \(\sigma^{2}_{\beta}\). The values of the design vector \(\textbf{X}^{'}_{t}\) comes directly from the predictors and the variance for \(V\) is set. Thus, all the parameters needed for modeling are set, and I use a dynamic linear regression model through the function to calculate my values for the observational values (\(y_t\)) and the state-space parameters (\(\theta_t\)). This is done through the filtering method.

The filtering distribution takes in the DLM, and returns a series of one-step forecasts for the observations. These one-step forecasts are created from filtering all the information up to time \(t\). The first step of the filtering distribution has a starting value \(\theta_0 \sim N(m_0, C_0)\). \(m_0\) and \(C_0\) are the pre-sample means and variances for \(\theta\).

Creating a filtered distribution with the function returns a series of one-step forecasts and variances for the observations, as well as the same information for the state-space vector.

For a time-invariant dynamic linear model, there would be no extra work for finding a forecast for the observations after a given point \(t\). But, for a time-varying model, such as this, the \(\textbf{X}^{'}_{t}\) values are also unknown past the given point \(t\). The Kalman filtering method extends the time-series with new future predictor values, but does not input future values for the observational values. Once the future predictor values are entered, I create a filtered distribution with this new set – using the filtered values of the extended observational values as my forecast.

There are a few common methods for finding new methods for the predictor values, such as inputting the last known observation, the mean or the median. However, since my predictor values continue to grow, these methods do not apply to this model. So, at the decision point, I fit ARIMA models for each of my new predictor values. I used the method to generate these new values for each of my predictor variables. Using the ARIMA method is a frequentist approach to a time-series forecast. I used this approach because for two reasons: it is unrealistic to build a separate Bayesian DLM for each parameter and these parameters simply grow without fluctuation (unlike the point spread), so it is not as necessary to build as complex of a model. There are three parameters that go into that ARIMA method: is the number of lag observations in the model, is the degree of differencing and is the order of the moving average.

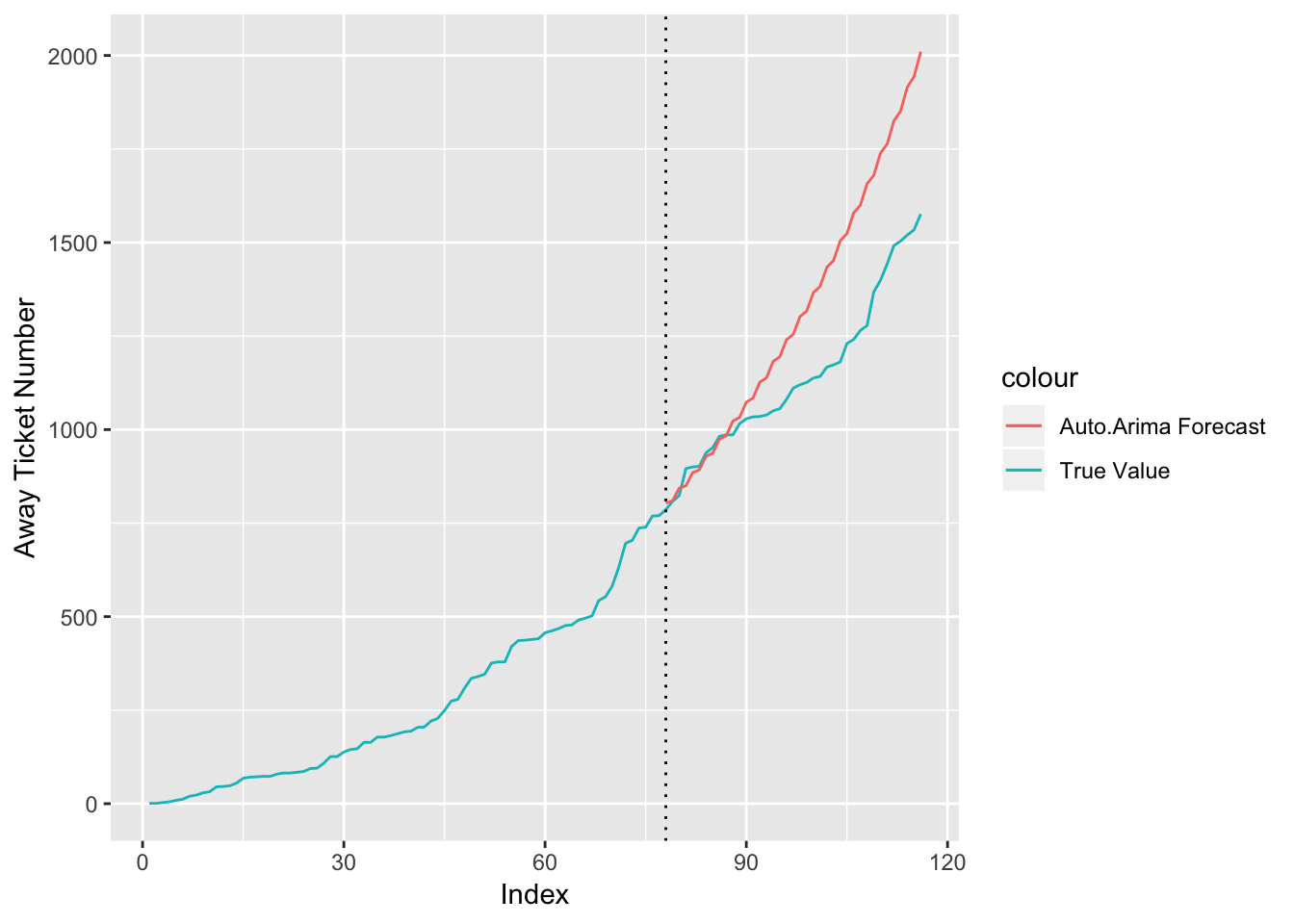

The function automatically chooses the best and values that will minimize the AIC and BIC of the model. However, by setting the seasonal parameter to “false”, I ensured that no model that incorporated a seasonal trend is chosen because that would not fit these data. Figure 3.5 is the forecasted number of tickets versus the true number of tickets for the Green Bay Packers versus Minnesota Vikings game. While this forecast is certainly not perfect, it generally follows a similar path to the true value. This is certainly an imperfect method and one area for improvement in this facet of the model.

Figure 3.5: Forecasted versus True Away Ticket Number

This forecast model for the number of tickets is an ARIMA(1, 2, 2) model that is expressed in Equations and .

\[\begin{eqnarray} \hat{Y_t} =& \hat{y_t} + 2Y_{t-1} - Y_{t-2} \label{eqn:arima112} \\ \hat{y_t} =& \mu + AR1 \cdot y_{t-1} - MA1 \cdot e_{t-1} - MA2 \cdot e_{t-2} \label{eqn:arima1122} \end{eqnarray}\]Table 3.2 displays the coefficients to the ARIMA(1, 2, 2) model.

| Coefficient | |

|---|---|

| AR1 | -0.9895154 |

| MA1 | 0.1705304 |

| MA2 | -0.4278727 |

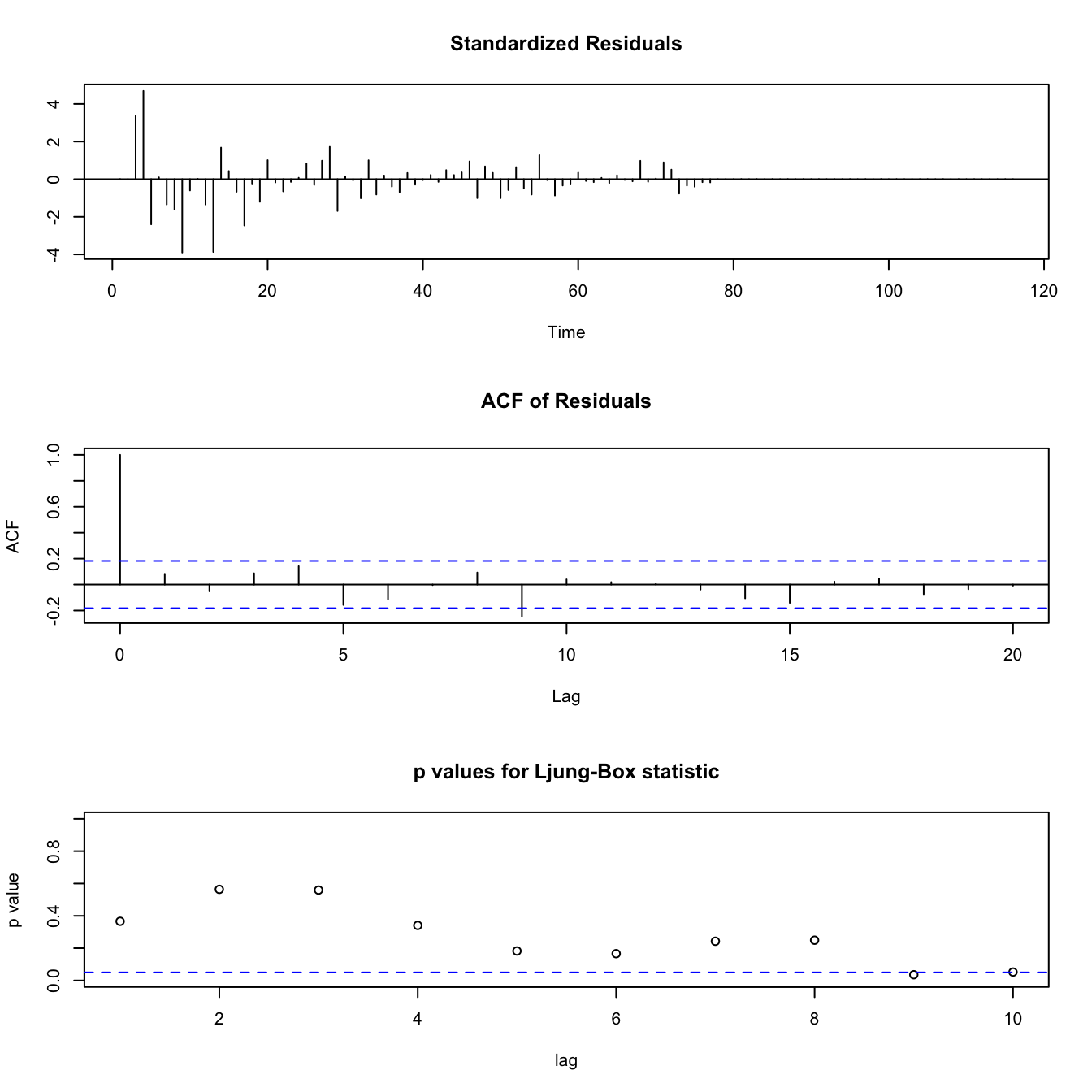

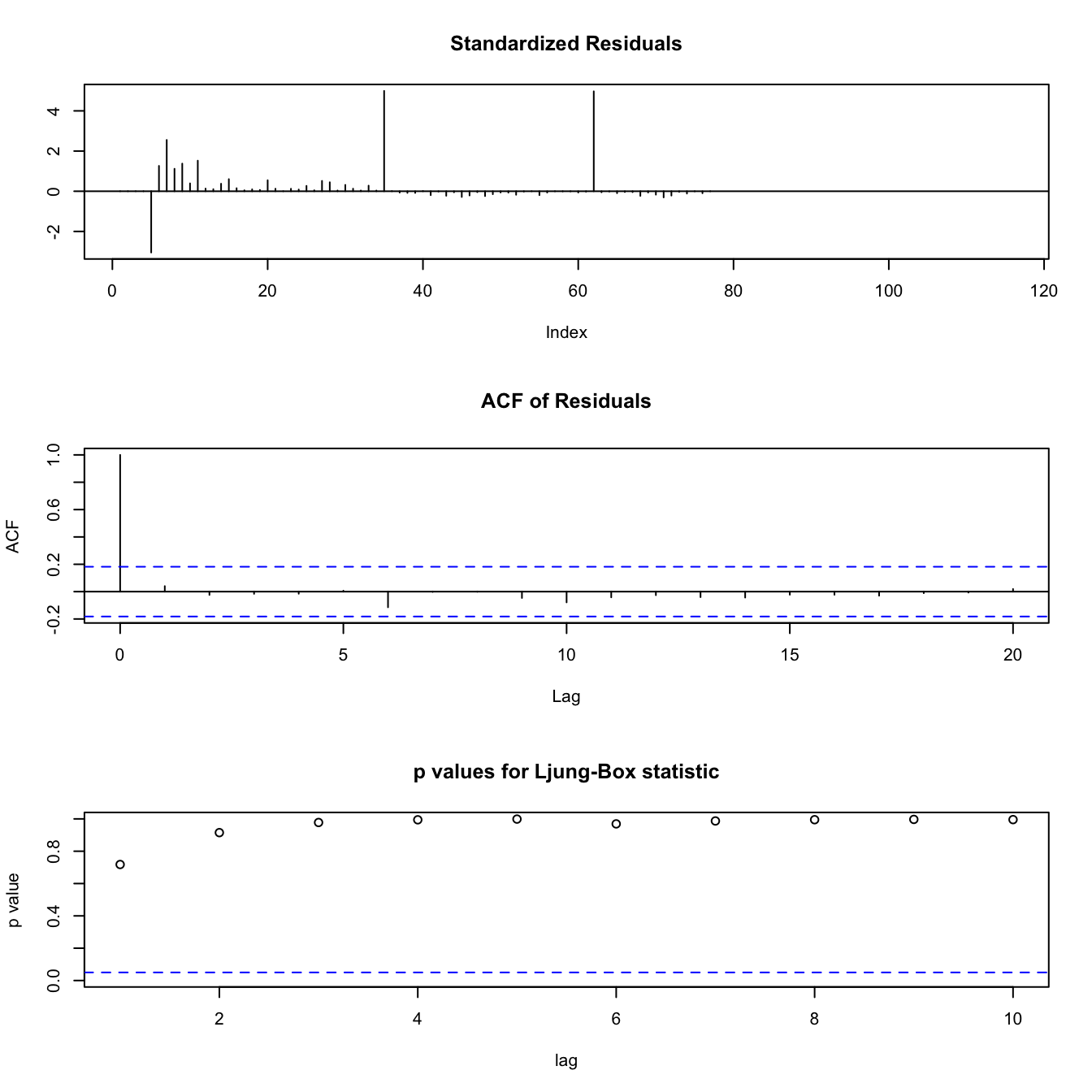

(#fig:fig:diag112)Diagnostic Plots for ARIMA(1, 2, 2) Model for Away Ticket Number

Figure ?? is the diagnostic plots for the method for forecasting the number of tickets for the away team. The plots show that this model is a pretty good fit for the data, as the standardized residuals generally look like white noise, though the p-values for autocorrelation become significant when the lag factor reaches high values such as 9. As these models are automatically fitted to best describe the data at hand, they generally fit the data pretty well.

It is important to note that the automatic ARIMA is fit for each different new variable from each time-series (opposed to using the same ARIMA model for the cash bet for all series) because the trends are not the same across all series. While bookmakers generally look to obtain 50/50 amount of cash on each game, this is certainly not always the case, as bookmakers will take a position on many of the games. Thus, the automatic ARIMA model will fit the model best to the data for each of the predictor variables.

Finally, after generating new values for the predictor variables in my DLM, the Kalman filtering method can be used to find predictions for the spread. This method follows the exact same approach as above, however, the one-step forecasts for the last third of observations will replace the NAs.

In addition, for comparison, the spread is also modeled with the forecast, using the same predictor variables as the Bayesian DLM as regressors. This is a frequentist approach for modeling each time-series. The accuracy of each approach is determined by looking at the average error in the predicted spread values versus the true spread values.

For this example game between the Green Bay Packers and the Minnesota Vikings, the method fit an ARIMA(1, 0, 0) model, which is a first-order autoregressive model.

Equation expresses this model. \[\begin{equation} \label{eq:arima100} Y_t = c + \phi_{p} Y_{t-1} + \epsilon_t \hspace{.5cm} \epsilon_t \sim N(0, \sigma^{2}_{\epsilon}) \end{equation}\]\(c\) is the intercept or the constant in the equation and \(\phi_{p}\) is the vector of coefficients for the autoregressive term (AR), as well as all the predictors. Table 3.3 shows the coefficients of this model and the variance parameter \(\sigma_{\epsilon}^{2}\) = \(0.00595\).

| Coeffecient | |

|---|---|

| AR1 | 0.8548193 |

| Intercept | -2.4132144 |

| Log Away Cash | -0.0153527 |

| Log Home Cash | 0.0008310 |

| Away Ticket Number | -0.0543334 |

| Home Ticket Number | 0.0022097 |

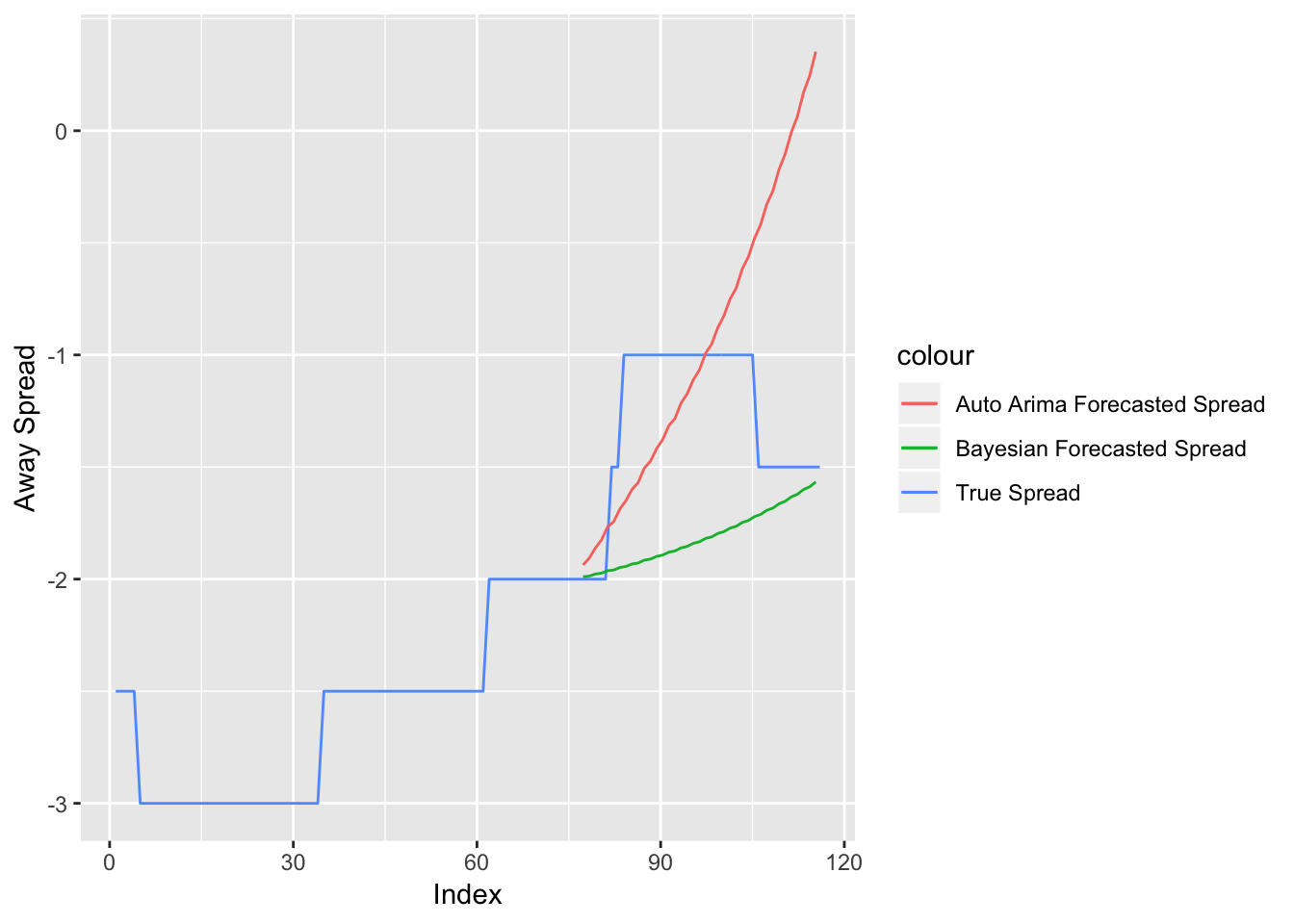

Figure 3.6: Spread versus Forecasts for Minnesota Vikings at Green Bay Packers Week 2, 2018

Figure 3.6 compares the Bayesian DLM and the frequentist ARIMA model’s predictions with the true final spread values from the game between the Minnesota Vikings and the Green Bay Packers. The blue line represents the true spread, while the red and green lines represent the Bayesian and frequentist forecasts, respectively. Both forecasts correctly predict the spread to rise. However, the Bayesian approach does a better job, in this scenario, of being closer to the true spread values.

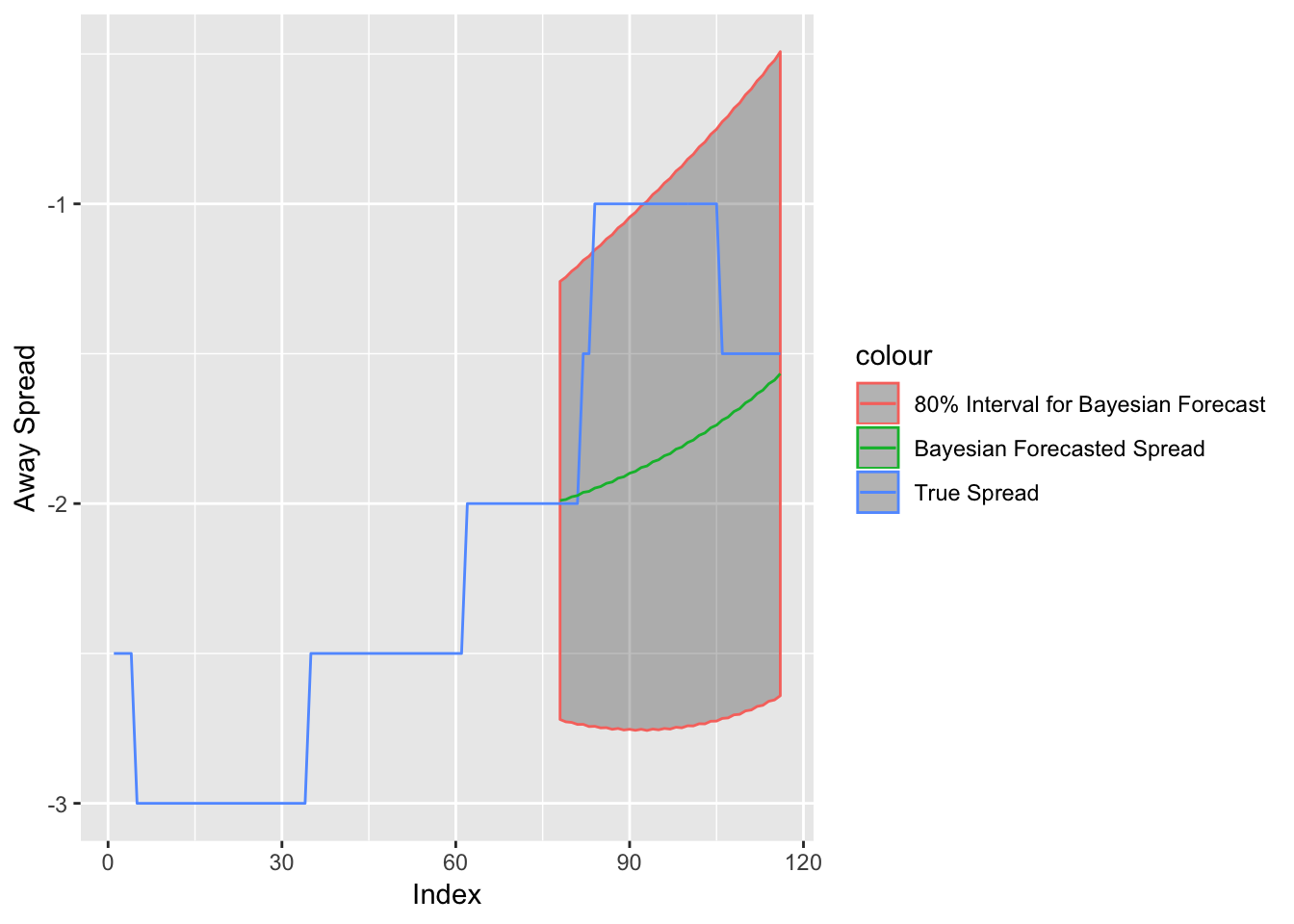

Figure 3.7: Spread versus Bayesian Forecast for Minnesota Vikings at Green Bay Packers Week 2, 2018 with 80% Confidence Interval

Figure 3.7 shows the Bayesian DLM forecast with a 80% confidence interval. I chose an 80% confidence based on trial and error. Here, while the spread at the decision point is within the 80% interval, there is a point when the spread reaches Vikings (-1) when the spread is out of the 80% interval. This will be a key distinction to make when it comes to betting strategies. Actually, this is why I chose an 80% confidence, as opposed to a more standard 95% confidence interval. With the wider 95% confidence interval, it is more rare for me to have a value outside of that interval. Since I make betting decisions based on whether the spread is within the selected interval, I use an interval that allows me to incorporate more instances of waiting to bet until the future spread moves to a more advantageous position. Also, while 95% confidence interval is more standard, the choice is as arbitrary as an 80% confidence interval.

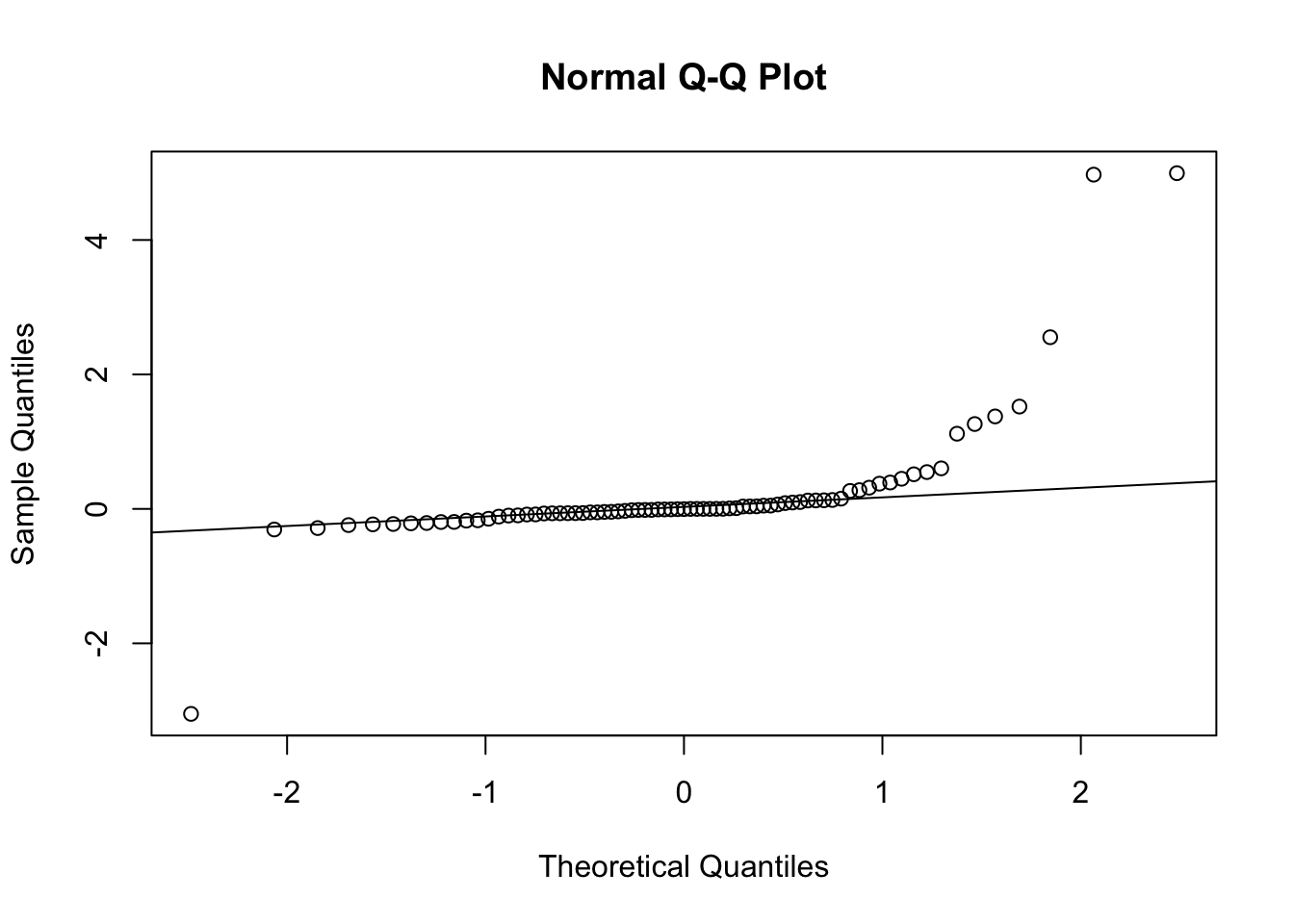

Figure 3.8: Normal QQ Plot for Residuals of the Forecasted Spread from the DLM

Figure 3.8 shows the residuals plot from the filtered distribution. The residuals do not seem to be completely normally distributed. This is due to the fact that the true spread can only move in increments of 0.5, which is a massive amount in terms of the jumps in the filtered values. When looking at the rounded values of the spread, however, the residuals are more likely to be normally distributed.

Figure 3.9: Diagnostic Plots for DLM of MIN at GB game

Figure 3.9 is the diagnostic plots for the Kalman-filtered model of the Minnesota Vikings at Green Bay Packers game. The p values for autocorrelation are all extremely high, indicating there is no autocorrelation. The residuals generally look like noise, with a few exceptions attributed to the nature of these data, and the ACF is within the bounds for all factors of the lag.

After building two models, I chose to use the forecasts from the best performing model. For each time-series, the error is the sum of the difference between each true spread and predicted spread. Each method had a vector of errors of 414 errors.

When looking at the error vectors, I removed 5 outliers where had error sums above 100 total points. It is interesting to note that both models had the same forecasts for some series’ – especially those with the largest errors. These massive errors that both models found are likely due to games that were affected extraordinary circumstances for which my model cannot account. I did not use the time-series predictions for these 5 games for my simulations either.

| gameID | Week | Year |

|---|---|---|

| PHIvJAC | 8 | 2018 |

| GBvDET | 5 | 2018 |

| CARvATL | 2 | 2018 |

| LARvTEN | 16 | 2017 |

| LACvJAC | 10 | 2017 |

Table 3.4 shows the five games that were excluded. After taking a brief look at these games, it is noteworthy that the PHIvJAC game was played in London at 9:30AM ET (6:30AM PT) on a Sunday. The odd start time could have caused odd betting patterns where there were way fewer bets in the last third of observations than normal. Typically the amount of cash increases more linearly. However, with such an early start time on a Sunday morning, combined with the fact that people often have plans on Saturday nights, there may be a massive influx of money very close to the start of the game, as people wake up just before the game starts – opposed to having a few hours to place bets before the game starts.

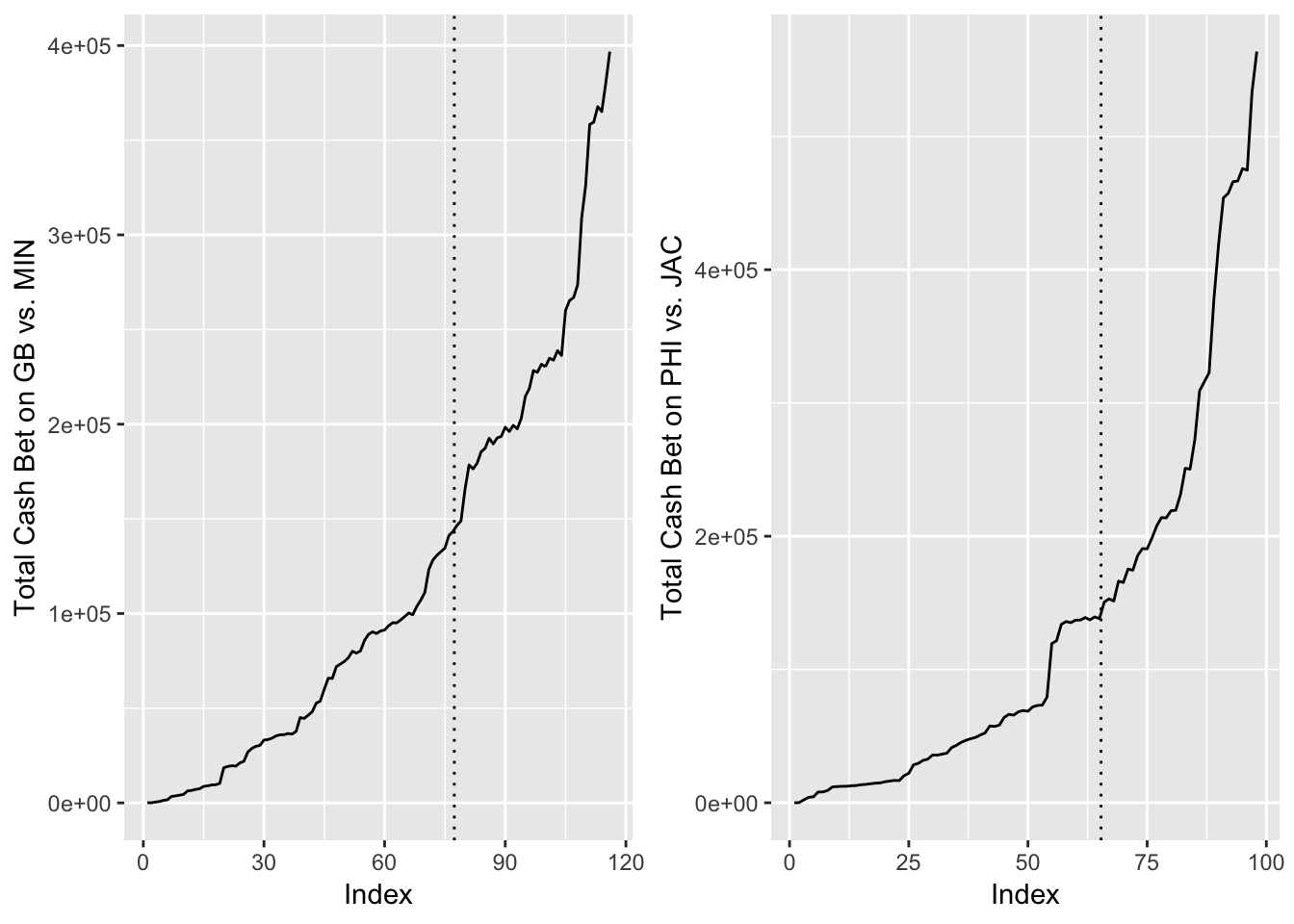

Figure 3.10: Total Cash Bet on GB vs. MIN (left) compared to PHI vs. JAC (right) Throughout the Week

Figure 3.10 represents the amount of cash bet throughout the week. The dotted line is the decision point. The charts show that the odd start time games have a significantly more massive exponential increase in the amount of money bet directly after the decision point. This makes these games tough to model. In addition, looking at the GB vs. DET game that was a massive outlier, star Green Bay Packers quarterback Aaron Rodgers was questionable to play throughout the week due to injury. He was finally announced as healthy late in the week. It is unclear the circumstances for the other three outliers.

| Min. | 1st Q | Median | Mean | 3rd Q | Max | |

|---|---|---|---|---|---|---|

| DLM Errors | -11.81761 | -0.5605623 | 0 | 0.0519290 | 0.4935073 | 38.05709 |

| Auto ARIMA Errors | -11.81761 | -0.5544576 | 0 | 0.0690394 | 0.4947444 | 38.05709 |

| DLM | Auto ARIMA | |

|---|---|---|

| Median Abs. Error | 0.5468115 | 0.7686323 |

| Mean Abs. Error | 1.4152842 | 3.4088224 |

Table 3.5 displays the summary statistics for my two vectors. these data shows that the DLM model has a lower mean error. In addition, when looking at simply absolute error, the Bayesian DLM approach provided a lower median absolute average error, as seen in Table 3.6, so I used this model’s forecasts for incorporating the future values from my decision point.

3.1.3 Modeling Number of Observations

To predict how many future points to forecast from a certain time \(t\), I built a simple linear regression model. I gathered ten equally spaced data points from each of my data sets. Each data point contains information on the amount of total cash, total number of tickets and number of observations up to time \(t\), as well as the number of final data points in this series. One row of this data frame is shown in Section 6.2 of the Appendix. I then built a simple mixed linear regression model to forecast the number of total data points in the series, so I could find how many points \(h\) I should use for forecasting at my decision point. While I considered using Poisson regression because the number of observations are a number of occurrences, the Poisson mixed linear and simple model did not fit the data as well as the linear mixed model, based on the diagnostics of the model. Equations — is the equation for this simple mixed model, with \(n_i\) representing the amount of final observations in the series, while \(n_t\) is the amount of observations up to time \(t\). Week is a factor and random effect (playoffs are treated here as week 0), as certain weeks attract more bettors than other weeks.

\[\begin{eqnarray} \notag &\text{for} \ j \ in \ 0 \ , \ . . . \ , \ 17 \\ &\hat{n_i} = \beta_{0} + \beta_{1} \cdot \log(\text{Total Cash Bet}) + \beta_{2} \cdot \log(\text{Total Tickets}) + \beta_{3} \cdot \text{n}_t + \alpha_j^{\text{week}} + \epsilon_i \label{eq:obsreg} \ \ \ \\ &\epsilon_i \sim N(0, \sigma^2_{\text{residuals}}) \label{eq:obsreg2} \\ &\alpha_j^{text{week}} \sim N(0, \sigma^2_{\text{week}}) \label{eq:obsreg3} \end{eqnarray}\]The coefficients and diagnostics for this model are also shown in Section 6.2 of the Appendix, as this is a less essential part of the greater goal of this thesis.

3.2 Game Result Prediction

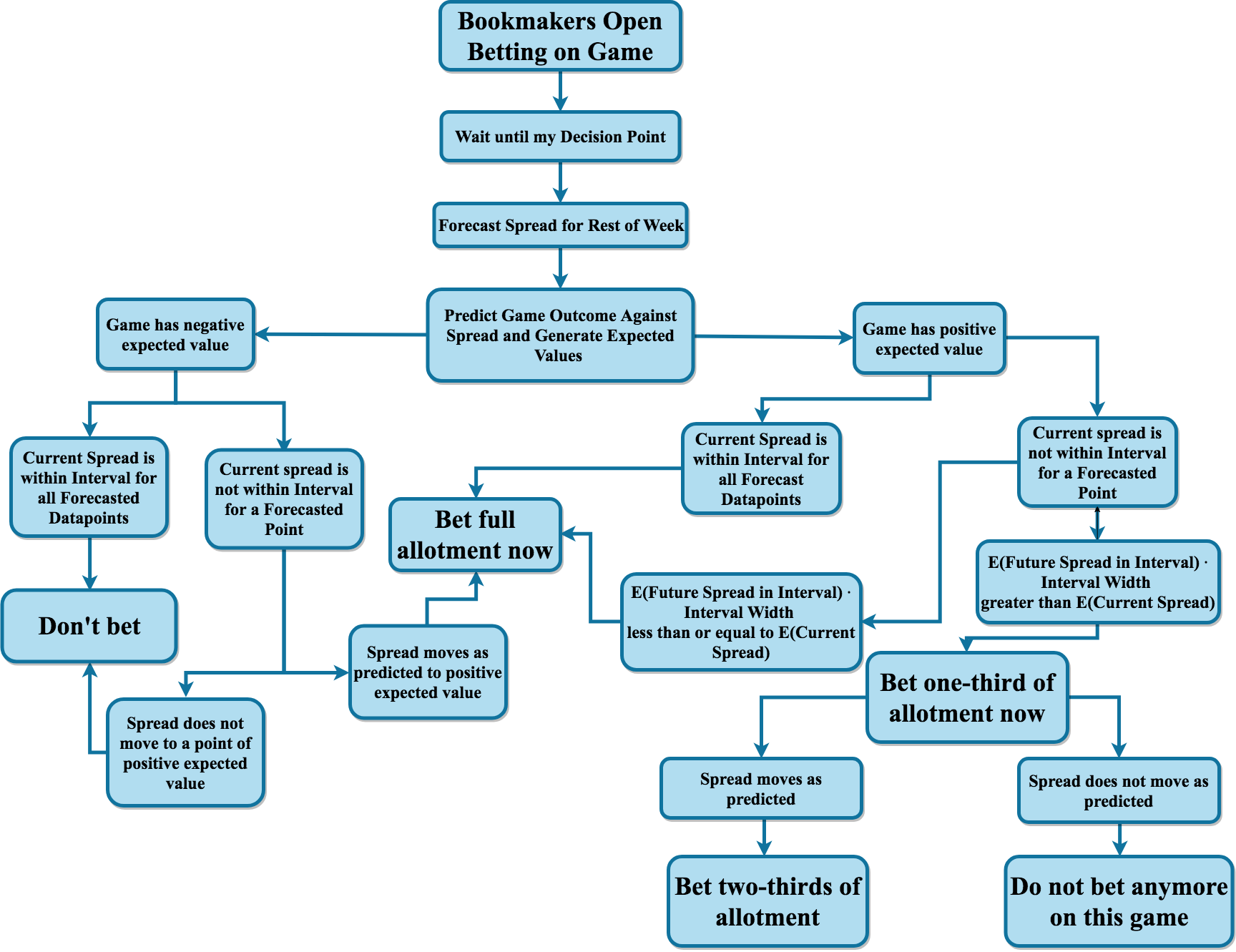

3.2.1 Overview of Decisions

Figure ?? shows a flow chart detailing the different possible scenarios and how much I would bet in each scenario. I use “allotment” to describe the betting amount because the many different betting strategies will bet different amounts for the same scenarios. The bookmakers open up betting on the game by placing an initial spread typically about a week before the game starts. I then wait until my decision point, forecast the spread for the rest of the week up until game time and provide a probability estimate for each team beating the spread. If betting on the game provides negative expected value based on the probability point estimate, I do not bet on the game, but I leave the opportunity open to bet later on in the week if a new, forecasted spread would make the advantageous to bet on. If the game has positive expected value, I place my bet on the game at the decision point. However, if the future forecasted spread projects a new spread that is even more advantageous to bet on, then I will only place a portion of my bet at the decision point and wait to place the rest of my bet. If the spread does in fact move as projected, I then place the rest of the bet the moment the spread hits my projections.

3.2.2 Exploratory Data Analysis

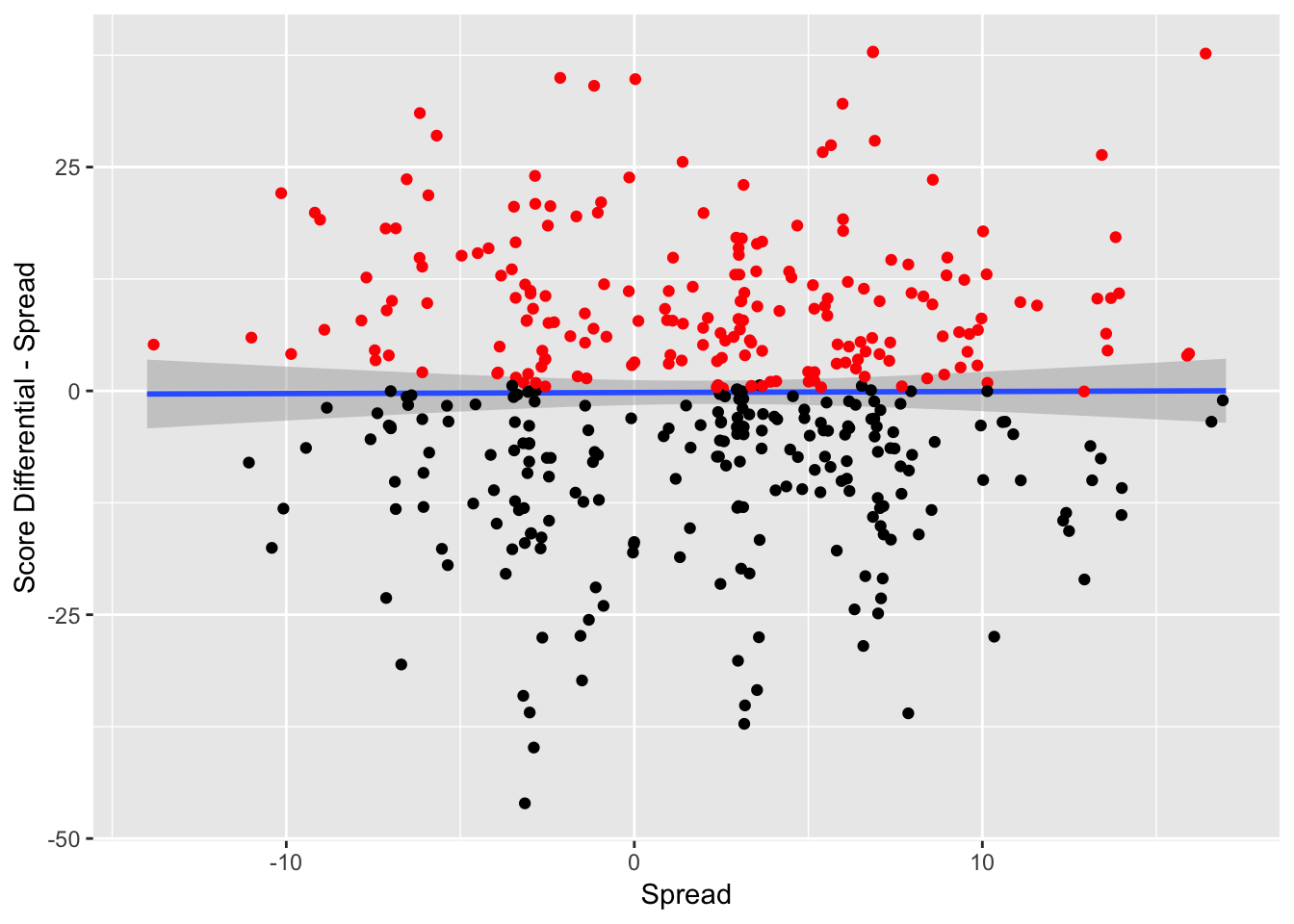

Figure 3.11: Game Result Against Spread vs. Spread – The red indicates that the team has beat the spread and the black indicates that the team has failed to beat the spread

Some key decisions determine whether the actual spread itself was a major factor in predicting team performance against the spread. In Figure 3.11, the y variable is the score differential during the game subtracted by the spread, in order to standardize the scores. For example, if the away team wins by 11 points, and the spread had the away team favored by 10 points, the y-variable in this scenario would be 1, as the away team performed one point better than the spread. The x variable is the spread. The red points are the observations where the away team covered the spread and the black points are the observations where the home team covered the spread.

The spread does not seem to have any impact on the team’s performance against the spread. This means that bookmakers do not have any dead zones in making spreads where a certain team is much more likely to beat the spread at a certain point. There do not seem to be any biases (either making spreads too small or too large), with respect to the spread and the performance.

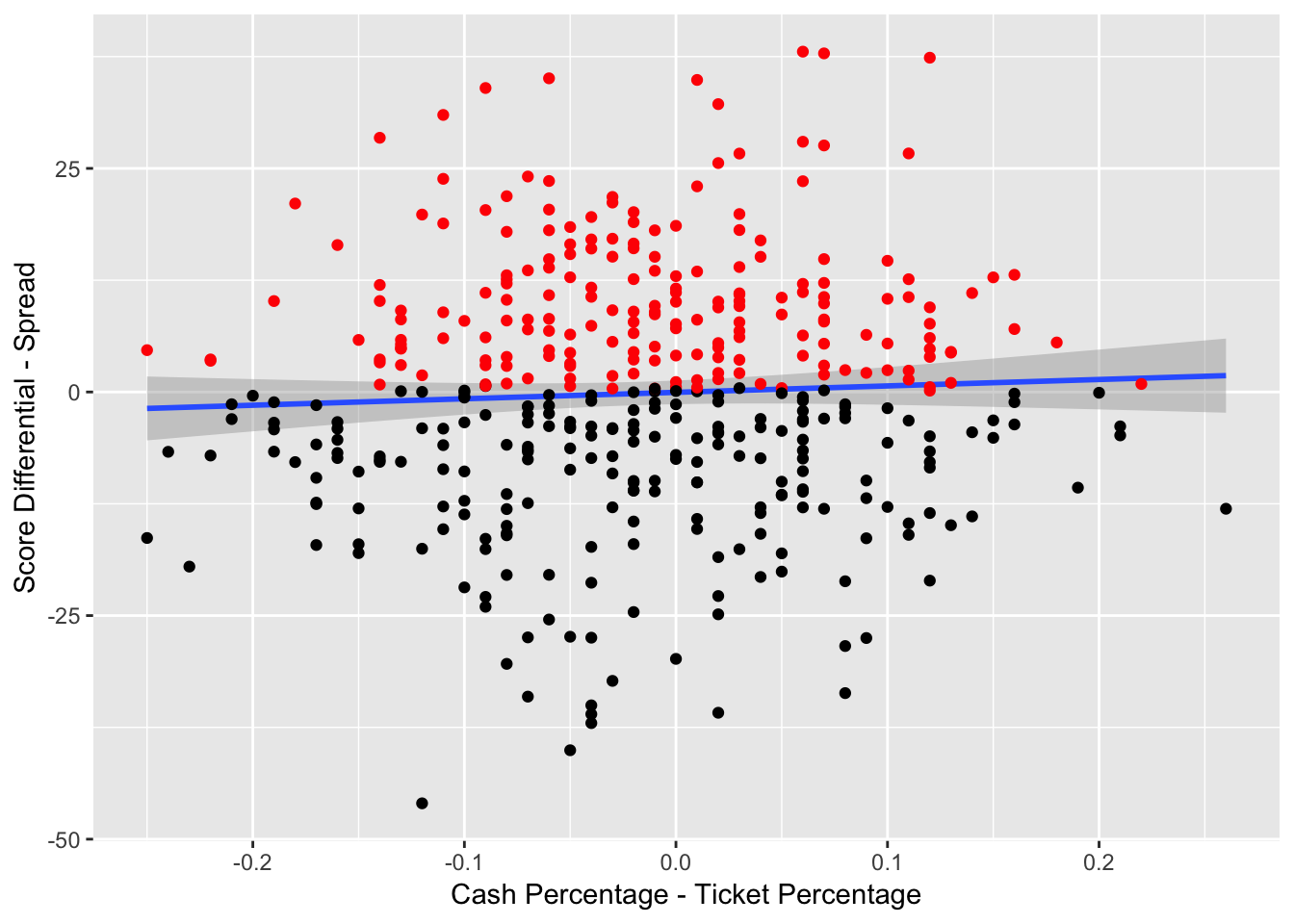

Figure 3.12: Result Against Spread vs. Cash-Ticket Percentage Difference – The red indicates that the team has beat the spread and the black indicates that the team has failed to beat the spread

Figure 3.12 examines the relationship between the cash and ticket percentages and the outcome against the spread. When there is a significantly higher percentage of cash bet on a team, in comparison to to the number of bets on a team, one of the teams is receiving larger bets. This is typically an indicator that professional bettors are betting on a team. Those who bet on sports for living tend to bet significantly more than those who bet recreationally, and the professional betters tend to be correct more often than the recreational betters.

From Figure 3.12, when the cash percentage rises, in comparison to the ticket percentage, the team tends to perform slightly better, with respect to the spread. This is an indication that the cash-ticket difference may be a useful indicator of performance.

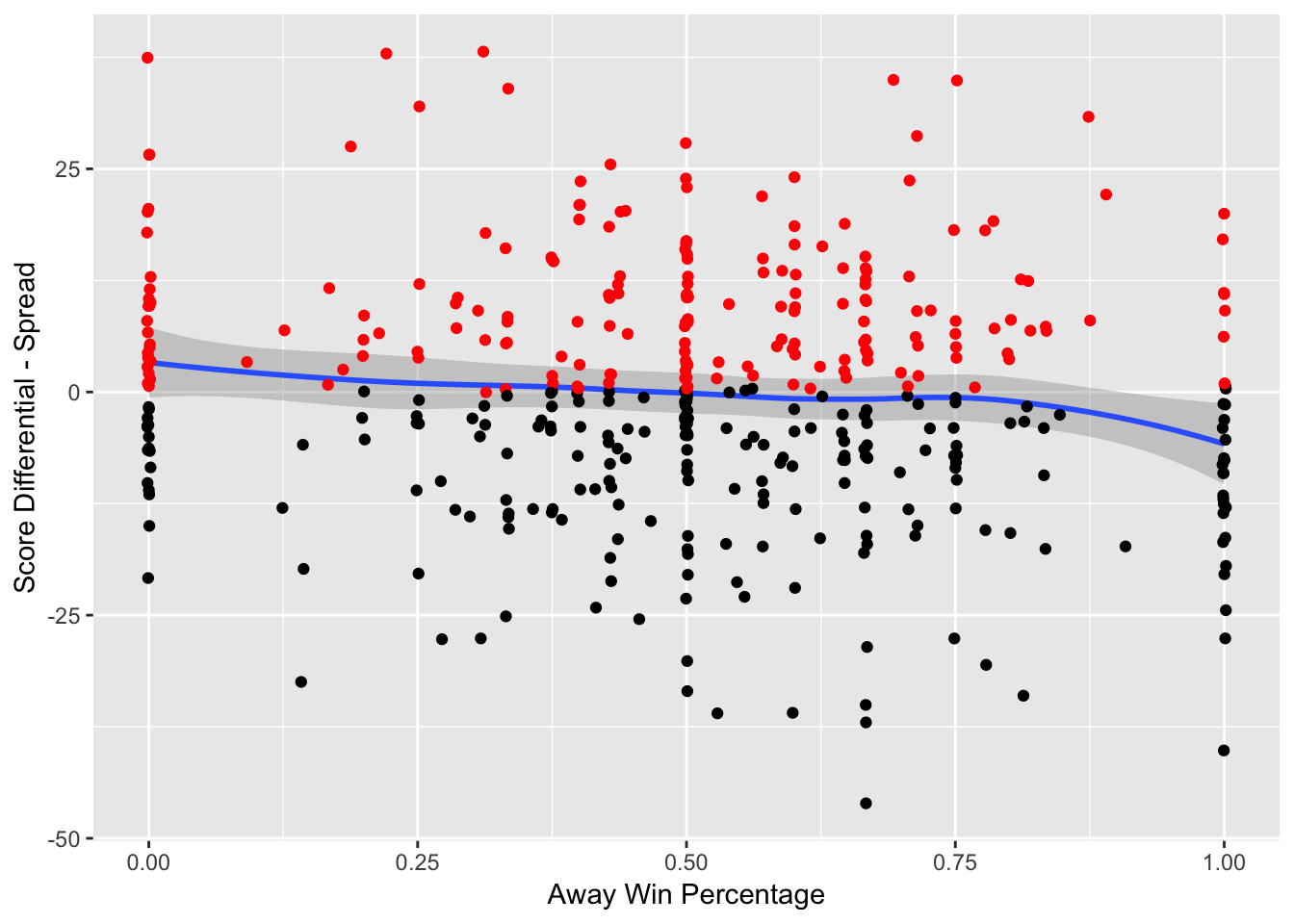

Figure 3.13: Game Result Against Spread vs. Away Win Percentage – The red indicates that the team has beat the spread and the black indicates that the team has failed to beat the spread

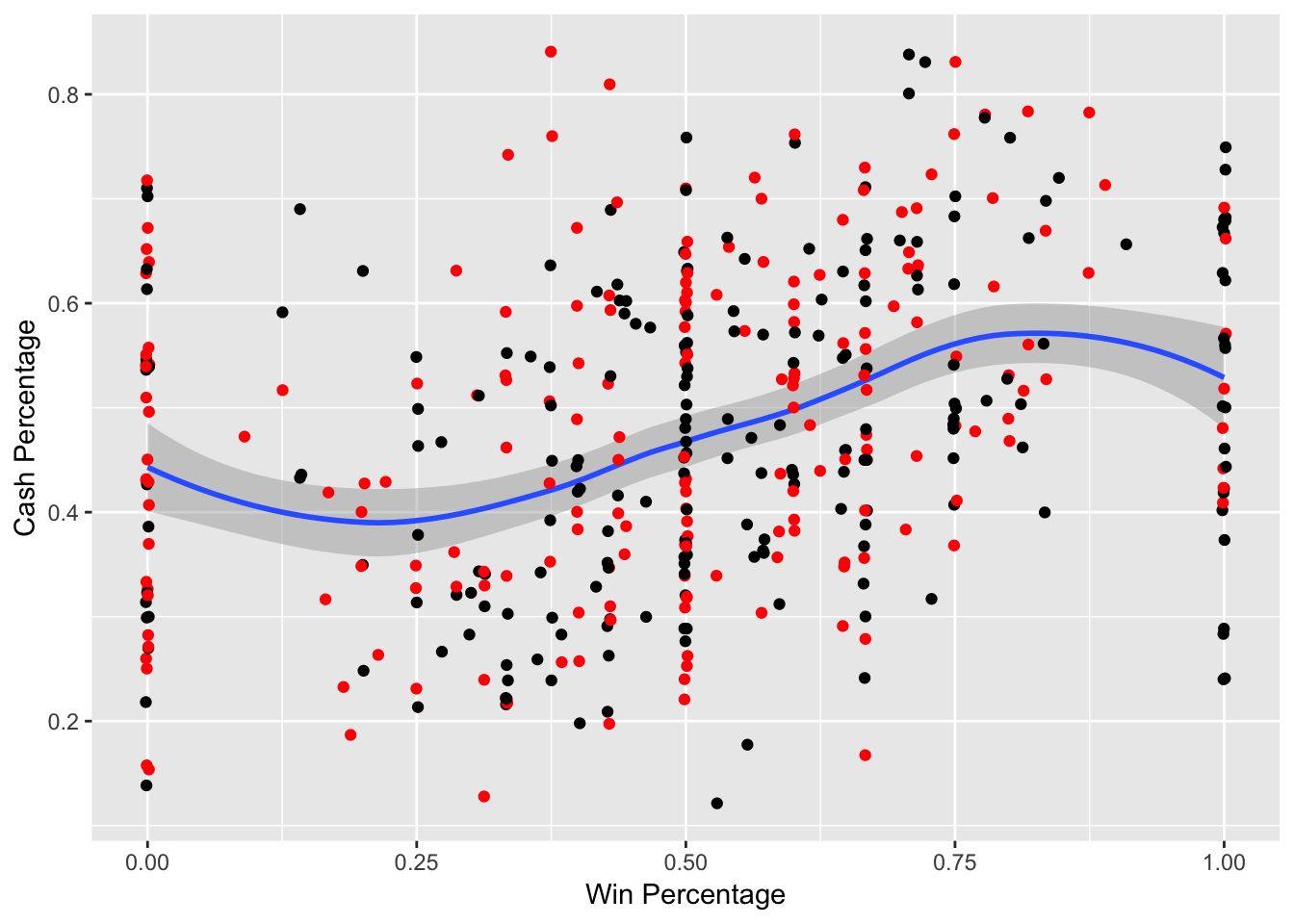

Figure 3.14: Cash Percentage vs. Win Percentage – The red indicates that the team has beat the spread and the black indicates that the team has failed to beat the spread

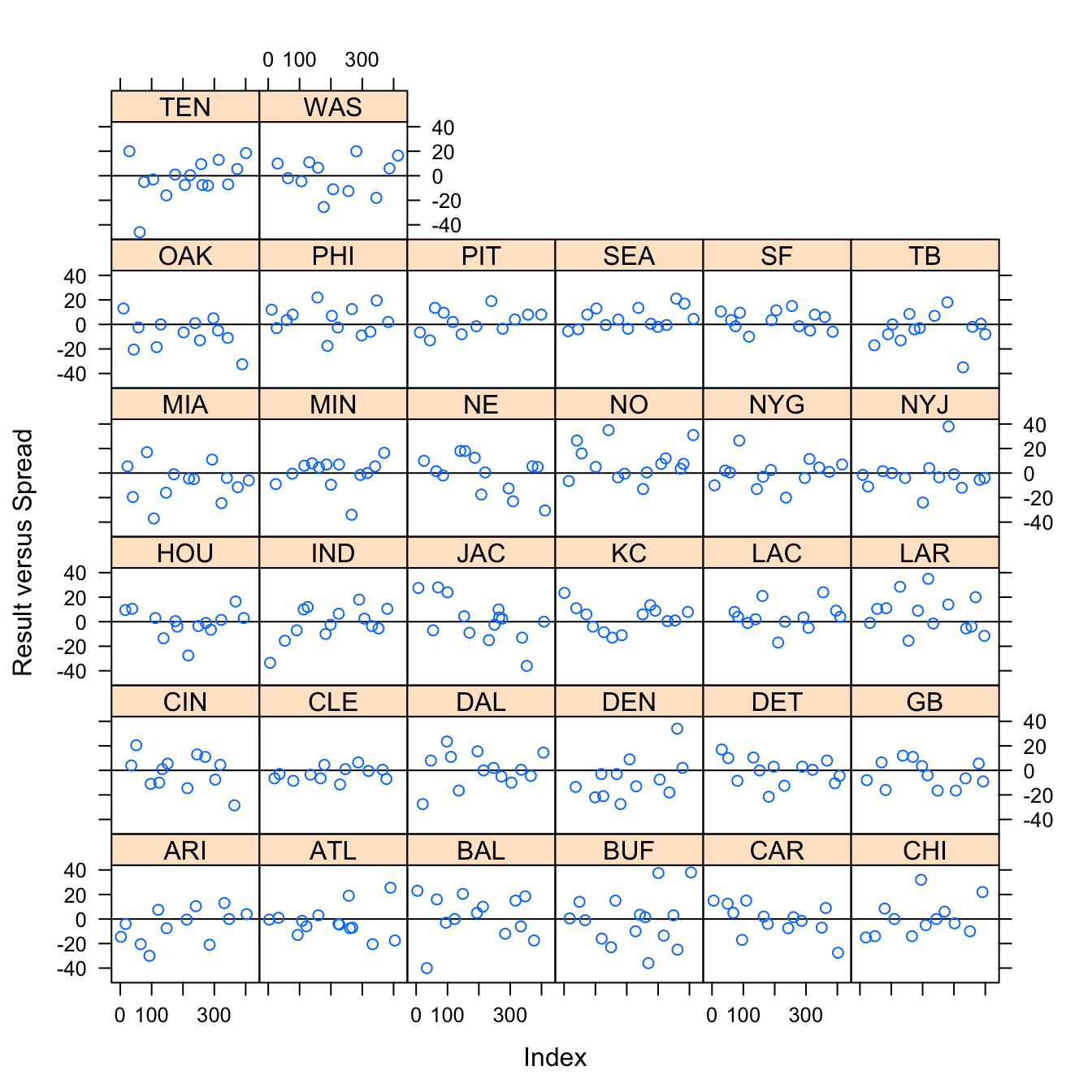

Figure 3.15: Result versus Spread by Away Team

Figure 3.13 shows a team’s performance against the spread relative to its current win percentage. The data shows that as the win percent rises for a team, its performance against the spread gets worse. This is indicative of the fact that many bettors overreact to past performance – especially when it comes to undefeated or winless teams, so the bookmakers will “shade” the lines against the more popular team. For example, if a team is 2-0, many bettors will overreact to a small sample size, and in order for the bookmakers to achieve equal amount of money on each team to guarantee themselves a profit, the bookmakers will move the line against the undefeated team. The opposite phenomena occurs for winless teams.

Figure 3.14 shows that as win percentage increases, the cash percentage tends to increase. At the edges with win percentages of 0% and 100%, this trend seems to slightly reverse. This is likely due to bookmakers shading the lines at such an extreme amount for these extreme win percentages, where they are able to achieve nearly equal action.

Figure 3.15 shows the result against the spread for each away team. There is great variation among all the teams, and while certain teams seemed to perform better against the spread, like the New Orleans Saints, treating the team as a random effect in modeling seems to suit the data.

3.2.3 Model Approach

There were a few different approaches to modeling that deserved consideration. Because scores are only in whole units, an ordinal regression model seemed as if it could have been appropriate. However, because there are an unbounded amount of levels, as well as the fact that there are so many levels – many of which have few data points – this approach would not have yielded appropriate results. A mixed linear model is a good approach to model these data with many different groups (the different teams). The downfall to this approach is that it does not give extra weight to the peaks in the score differences between games at 3 and 7, but still the score predictions would be more accurate than an inappropriately used ordinal regression model. Perhaps if there were tens of thousands of data points where each level would be represented numerous times, an ordinal regression would be more appropriate.

To first assess the best mixed linear models, the models were whittled down based on minimizing the BIC on the full dataset. After finding two models with similar BIC’s but different predictors, the models were compared through k-fold validation. There were a few metrics in this used: error rate between predicted results for the test set and the actual results, and then betting (and bankroll) performance across each of the simulations. The k-fold validation used 100 simulations in order to get a large distribution of bankroll amounts. But, if this k-fold validation was performed as usual, this would leave the test data sets with only 4 data points. Instead, the data was randomly shuffled for each of the 100 iterations, and then broken up into 7 folds – with one fold used as a test data set and the rest as a training dataset.

3.2.4 Simulations

For generating the simulated probabilities of beating the spread for each game in the test dataset, I generated 500 draws from its posterior predictive distribution for each model.

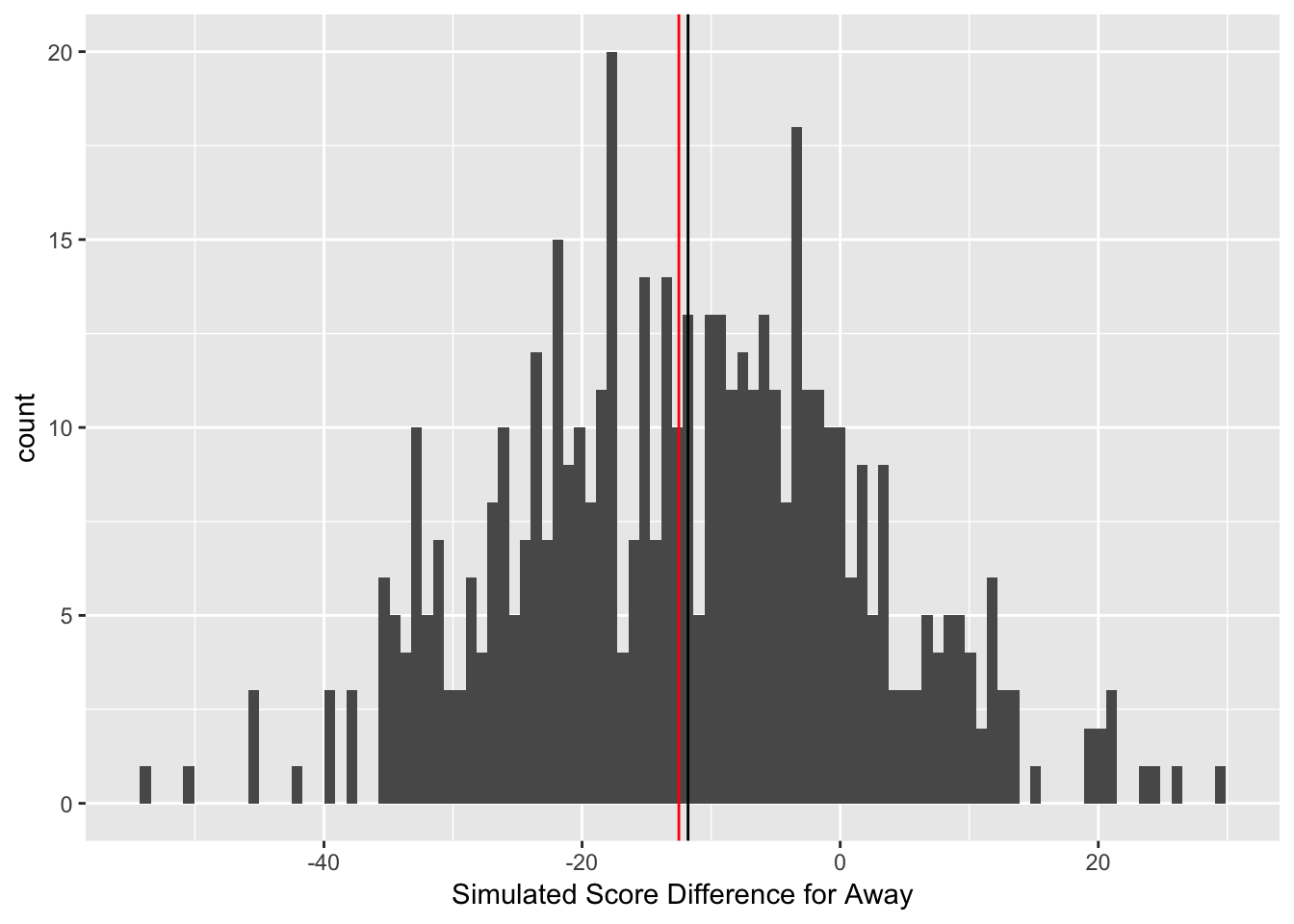

Figure 3.16: Simulated Outcomes for NYG @ Den Week 6, 2017

Figure 3.16 is a histogram representing the results of the 500 draws from the posterior predictive distribution from the best overall performing model (as will be discussed in section 3.2.5) for an example game in a test dataset for the New York Giants at the Denver Broncos during Week 6, 2017. The vertical black line represents the median of the 500 draws from the posterior predictive distribution, and the vertical red line represents the actual point spread. The median of the simulated outcomes (the vertical black line) is placed at -11.8, meaning the away team, the Giants, are expected to lose this game by 11.8 points. However, the spread (the vertical red line) at our first decision point has the Giants +12.5 points, meaning to beat the spread, the Giants must lose by 12 points or fewer, or win. Thus, at first glance, there seems to be a slight edge on betting on the New York Giants +12.5 because the spread has the Giants losing by 12.5 points, but the model projects the Giants to only lose by 11.8 points.

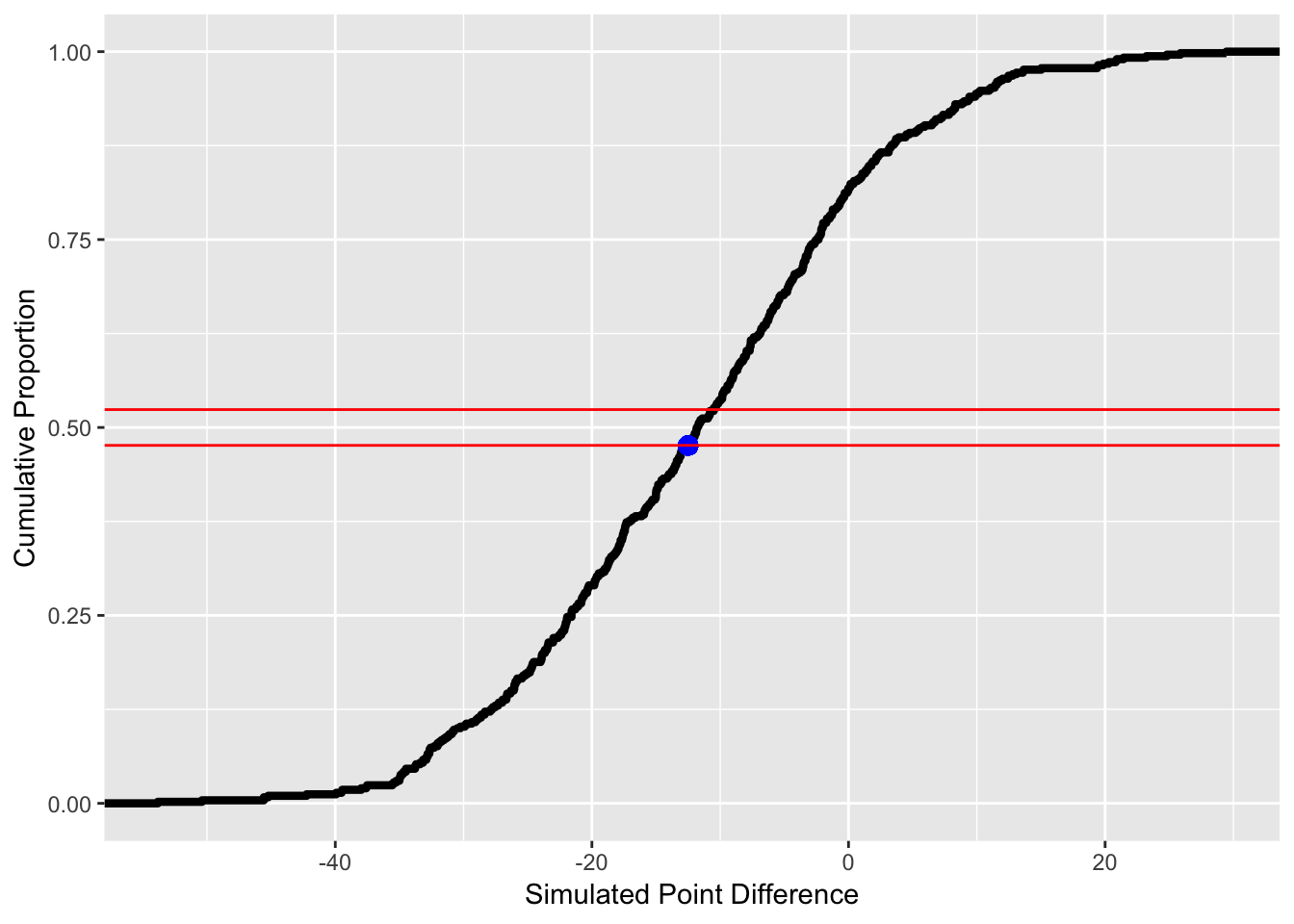

Figure 3.17: Empirical Cumulative Distribution of Simulated Outcomes for NYG @ DEN Week 6, 2017

Figure 3.17 is the empirical cumulative distribution (ECDF) of the 500 draws from the posterior predictive distribution. The blue represents where the point spread falls in the ECDF. Being either above or below the two redlines means that betting on this game will generate a positive expected value. If the point is below the lower redline, it is advantageous to bet on the away team, and if the point is above the top red line, then it is advantageous to bet on the home team. The interval of these red lines is (0.4762, 0.5238). If the ECDF is below 0.5, the probability of success is 1 - ECDF(Point Spread). Because the casino does not give fair odds, and offers -110 odds, where a bettor must stake 1.1 units to win 1 units, this interval of probabilities generates a negative expected value. The edges of the probability provide an expected value of 0. Expected value is calculated by adding the probability of failure multiplied by -1.1 (the amount of units lost if the bet loses) and the probability of success multiplied by 1 (the amount of units won if the bet wins). Equation is the equation for expected value.

\[\begin{equation} \label{eq:beatspread} P(\text{Beating Spread}) \cdot 1 + (1 - P(\text{Beating Spread})) \cdot -1.1. \end{equation}\]Now, to find the probability of success for each game, I found where on the ECDF of the draws from the posterior predictive distribution the current spread falls. For example, the ECDF for this point spread of Giants (+12.5) is \(0.478\), so the probability of the Giants beating the spread is \(1 - 0.478 = 0.522\). The model expects the Giants to beat the spread with a proportion of \(0.522\). The model expects the Broncos to beat the spread with a proportion of 0.478. Since the spread, in this scenario, is 12.5, and not a full number, there is no probability of a push, or tying the spread.

After generating a probability of success, the expected value can be calculated. Since one must bet 1.1 units to win 1 unit, the expected value is \(0.522 - ((1-0.522) \cdot 1.1) = -0.0038\). Betting on the Broncos is even more disadvantageous, as their expected value is \(0.478 - ((1-0.478) \cdot 1.1) = -0.0962\).

In this scenario, the model suggests a negative expected value of betting on the Giants with this spread of -0.0038 units lost per unit bet. There is a negative expected value for betting on both teams! So, because of the negative expected value, there will be no bet on the game at this point. However, the forecasted spread impacts whether there may be a bet at a future time point.

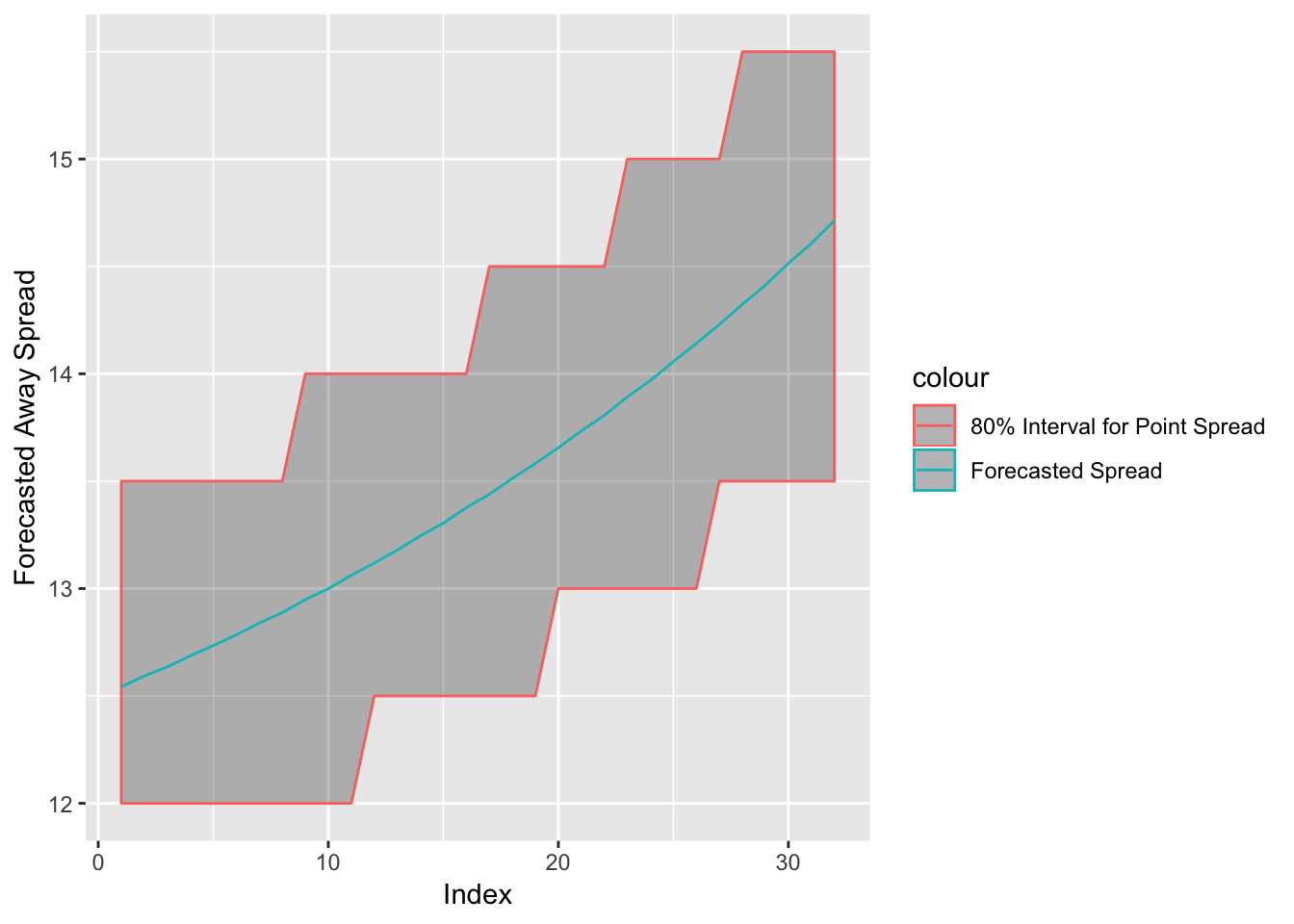

Figure 3.18: Forecasted Spread for the NYG @ DEN Week 6, 2017 with 80% Interval

Figure 3.18 shows the forecasted spread up until projected game time for the Giants and Broncos game. The 80% interval is using a rounded spread, to the nearest one-half, to calculate my interval. The forecasted spread predicts an 80% confidence interval of (13.0, 14.5) for this spread about 20 data points into the 32 point forecast. The current decision point spread of 12.5 is outside of this interval. The expected value changes once the spread enters my interval. The Empirical Cumulative Distribution for the Giants when the spread is Giants (+13) is 0.47 meaning the simulated probability is \(1 - 0.47 = 0.53\). The new expected value is \(0.53 - ((1-0.53) \times 1.1) = 0.013\). Thus, if the spread does move within my interval at any point, I will bet.

This is an extremely small edge. However, the spread does actually move to 13.0, so there would be a bet on the Giants. However, the 80% interval later moves even further to (13.5, 15.5) about 30 index points into the forecast. The new ECDF of Giants (+13.5) is 0.45, meaning the new simulated probability is 0.55 and the new expected value is 0.055. The level of confidence that the spread will move to Giants (+13.5) is only 80%, but \(0.055 \times 0.8 > 0.013\), so at the first point of positive expected value, I choose that my bet is only one-third of the total allotment. For example, if the bet allotment for this game is 15 units, I would place a 5 unit bet on the Giants (+13). The other two-thirds of the allotment will be placed if the spread enters my interval and hits 13.5. In actuality, the spread does move to Giants (+13.5). So, two-thirds of the bet allotment – or 10 units – is placed at Giants (+13.5).

This ended up being extremely important because the Giants actually lost the game by 13 points, so a bet on the Giants at (+12.5) would have lost money, while the 5 unit bet on the Giants +13 is a push, meaning the money is returned, and the 10 unit bet at Giants +13.5 wins and returns a profit of \((10 / 1.1) = 9.09\) units!

This was the process I went through for each game in the test data set for each model, as there were different probabilities of beating the spread from the two different models. For comparison, I used a simple method from a simple multiple linear regression, where the point estimate was generated directly through utilizing the mean and variance of the predicted value from the formula to calculate the t-value of the point spread and the using the t-distribution to find a probability estimate. One row of my test data set with the probabilities included is displayed in the Section ?? of the Appendix.

3.2.5 Model Selection

There were two models that provided similar results of BIC on the full datasets. Both models used the away team as a random effect, and used the decision point spread as a predictor. But, the first model delves into more team-specific stats, such as win percentages, number of wins and the weighted DVOA, in order to best predict who will win. I will refer to this model as the “team-specific” model. The second model tends to look more at the betting trends, such as the log of the tickets and cash bet for both the away and home team, and the difference between the cash and ticket percentage (this model also uses the difference between the teams’ weighted DVOA). for its predictors. The second model also uses the year as a random effect. This model will be referred to as the “betting-trends” model When looking to incorporate certain additional variables into the other models, the BIC rises.

The team-specific model is shown in Equations (??) — (??).

\[\begin{eqnarray} &\notag \text{for} \ i \ \text{in} \ 1 \ , \ ... \ , \ 414 \ \text{and} \ j \ \text{in} \ 1, \ ... \ , \ 32 \\ &\hat{\text{Away Score - Home Score}_i} = \alpha_{j [i]}^{\text{away team}} + \boldsymbol{\beta}^{'} \cdot \textbf{X}_i + \epsilon_i \label{eq:lmer11} \\ &\epsilon_i \sim N(0, \sigma^2_{\text{residuals}}) \label{eq:lmer12} \\ &\alpha^{\text{away team}}_{j} \sim N(0, \sigma^{2}_{\text{away team}}) \label{eq:lmer13} \end{eqnarray}\]

<table style="text-align:center"><tr><td colspan="2" style="border-bottom: 1px solid black"></td></tr><tr><td style="text-align:left"></td><td><em>Dependent variable:</em></td></tr>

<tr><td></td><td colspan="1" style="border-bottom: 1px solid black"></td></tr>

<tr><td style="text-align:left"></td><td>score_diff_away</td></tr>

<tr><td colspan="2" style="border-bottom: 1px solid black"></td></tr><tr><td style="text-align:left">poly(home.wins, 2)1</td><td>174.093</td></tr>

<tr><td style="text-align:left"></td><td>(113.876)</td></tr>

<tr><td style="text-align:left"></td><td></td></tr>

<tr><td style="text-align:left">poly(home.wins, 2)2</td><td>116.674<sup>*</sup></td></tr>

<tr><td style="text-align:left"></td><td>(68.643)</td></tr>

<tr><td style="text-align:left"></td><td></td></tr>

<tr><td style="text-align:left">poly(away.wins, 2)1</td><td>-90.551</td></tr>

<tr><td style="text-align:left"></td><td>(100.298)</td></tr>

<tr><td style="text-align:left"></td><td></td></tr>

<tr><td style="text-align:left">poly(away.wins, 2)2</td><td>-106.191<sup>*</sup></td></tr>

<tr><td style="text-align:left"></td><td>(62.894)</td></tr>

<tr><td style="text-align:left"></td><td></td></tr>

<tr><td style="text-align:left">first_decision_point_spread</td><td>-0.790<sup>***</sup></td></tr>

<tr><td style="text-align:left"></td><td>(0.179)</td></tr>

<tr><td style="text-align:left"></td><td></td></tr>

<tr><td style="text-align:left">home.winpercent</td><td>-5.878</td></tr>

<tr><td style="text-align:left"></td><td>(5.919)</td></tr>

<tr><td style="text-align:left"></td><td></td></tr>

<tr><td style="text-align:left">away.winpercent</td><td>-13.392<sup>**</sup></td></tr>

<tr><td style="text-align:left"></td><td>(5.983)</td></tr>

<tr><td style="text-align:left"></td><td></td></tr>

<tr><td style="text-align:left">away_WEI.dvoa</td><td>0.197<sup>***</sup></td></tr>

<tr><td style="text-align:left"></td><td>(0.070)</td></tr>

<tr><td style="text-align:left"></td><td></td></tr>

<tr><td style="text-align:left">poly(home.wins, 2)1:home.winpercent</td><td>-292.348<sup>*</sup></td></tr>

<tr><td style="text-align:left"></td><td>(150.945)</td></tr>

<tr><td style="text-align:left"></td><td></td></tr>

<tr><td style="text-align:left">poly(home.wins, 2)2:home.winpercent</td><td>-73.081</td></tr>

<tr><td style="text-align:left"></td><td>(79.646)</td></tr>

<tr><td style="text-align:left"></td><td></td></tr>

<tr><td style="text-align:left">poly(away.wins, 2)1:away.winpercent</td><td>182.016</td></tr>

<tr><td style="text-align:left"></td><td>(141.167)</td></tr>

<tr><td style="text-align:left"></td><td></td></tr>

<tr><td style="text-align:left">poly(away.wins, 2)2:away.winpercent</td><td>86.750</td></tr>

<tr><td style="text-align:left"></td><td>(82.067)</td></tr>

<tr><td style="text-align:left"></td><td></td></tr>

<tr><td style="text-align:left">Constant</td><td>9.993<sup>***</sup></td></tr>

<tr><td style="text-align:left"></td><td>(3.839)</td></tr>

<tr><td style="text-align:left"></td><td></td></tr>

<tr><td colspan="2" style="border-bottom: 1px solid black"></td></tr><tr><td style="text-align:left">Observations</td><td>414</td></tr>

<tr><td style="text-align:left">Log Likelihood</td><td>-1,616.151</td></tr>

<tr><td style="text-align:left">Akaike Inf. Crit.</td><td>3,262.301</td></tr>

<tr><td style="text-align:left">Bayesian Inf. Crit.</td><td>3,322.689</td></tr>

<tr><td colspan="2" style="border-bottom: 1px solid black"></td></tr><tr><td style="text-align:left"><em>Note:</em></td><td style="text-align:right"><sup>*</sup>p<0.1; <sup>**</sup>p<0.05; <sup>***</sup>p<0.01</td></tr>

</table>

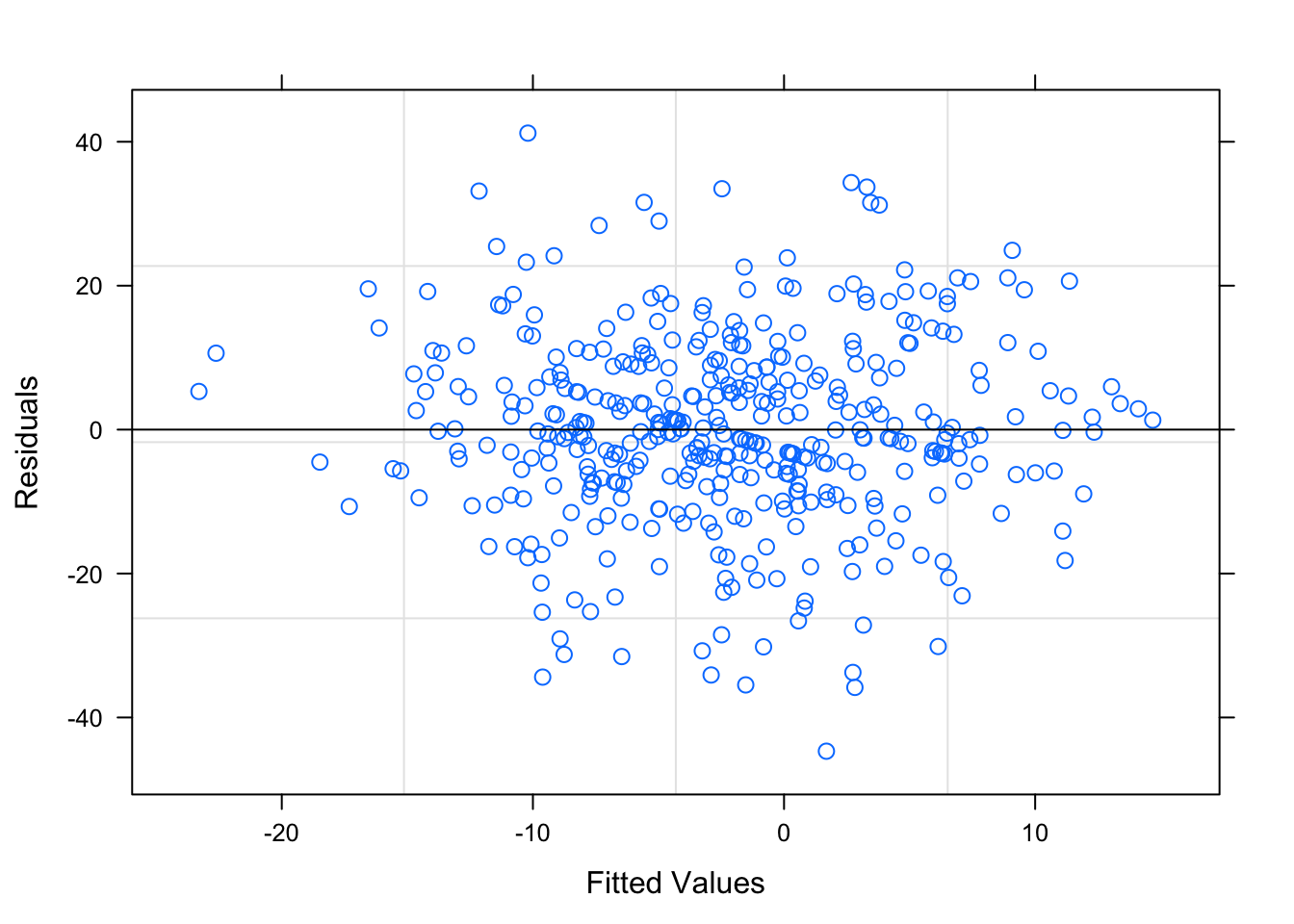

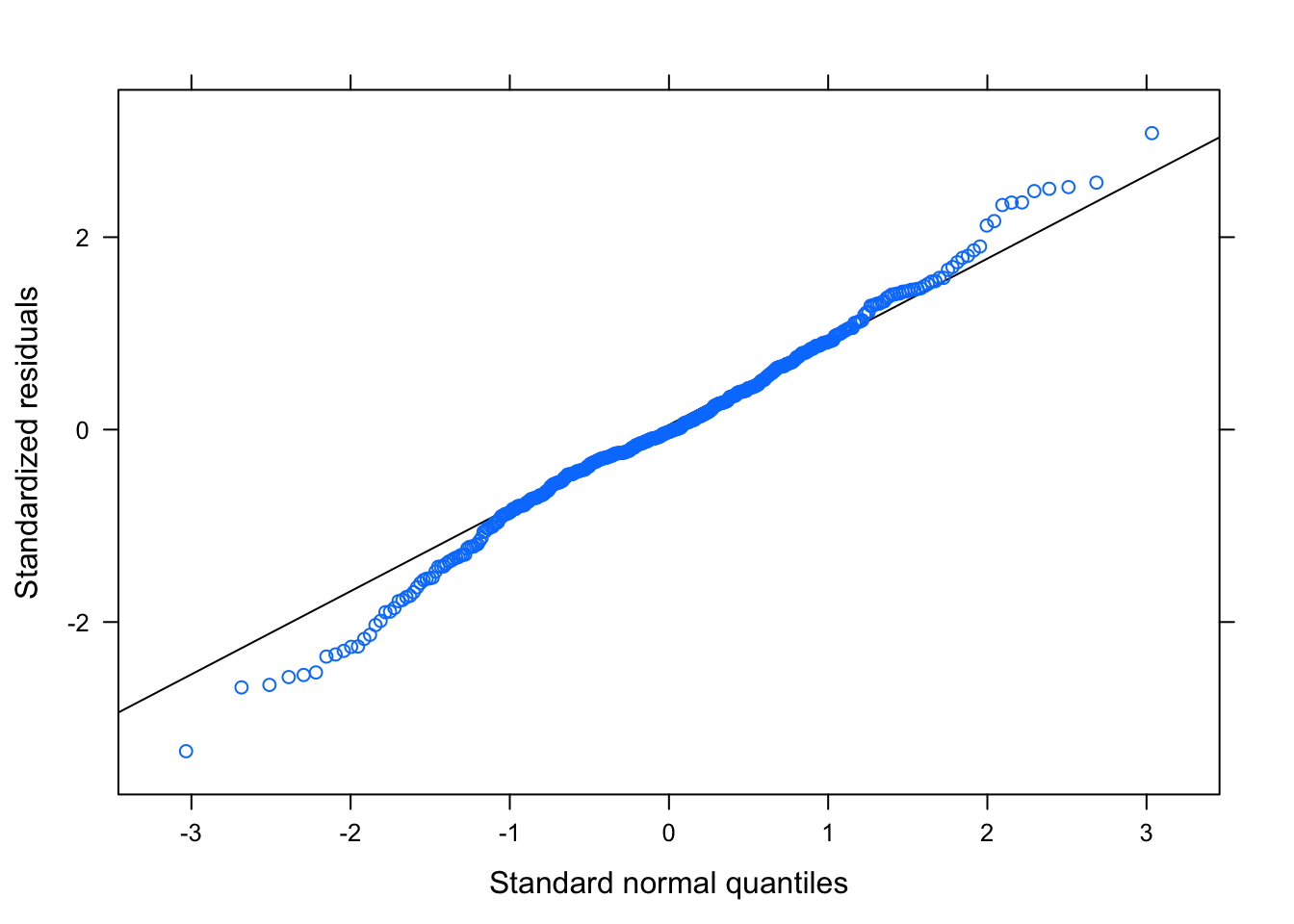

Figure 3.19: Residual Plots for Team-Specific Model

Table ?? is the output parameters for the Team-Specific model. Figure 3.19 is the diagnostic plots for this first model, and the model seems to pass all the diagnostic tests. The residuals tend to be random and non-correlated; the residual plots based on the groups are shown in Section ?? of the Appendix, but there are no egregious errors.

The model focusing on betting trends is shown in Equations — :

\[\begin{eqnarray} &\notag \text{for} \ i \ \text{in} \ 1 \ , \ ... \ , \ 414\text{;} \ j \ \text{in} \ 1, \ ... \ , \ 32 \ \text{and} \ m \ \text{in} \ 2017, 2018 \\ &\hat{\text{Away Score - Home Score}_i} = \alpha_{j [i]}^{\text{away team}} + \alpha_{m [i]}^{\text{Year}} + \boldsymbol{\beta}^{'} \cdot \textbf{X}_i + \epsilon_i \label{eq:lmer21} \\ &\epsilon_i \sim N(0, \sigma^2_{\text{residuals}}) \label{eq:lmer22} \\ &\alpha^{\text{away team}}_{j} \sim N(0, \sigma^{2}_{\text{away team}}) \label{eq:lmer23} \\ &\alpha^{Year}_{m} \sim N(0, \sigma^{2}_{\text{Year}}) \label{eq:lmer24} \end{eqnarray}\]

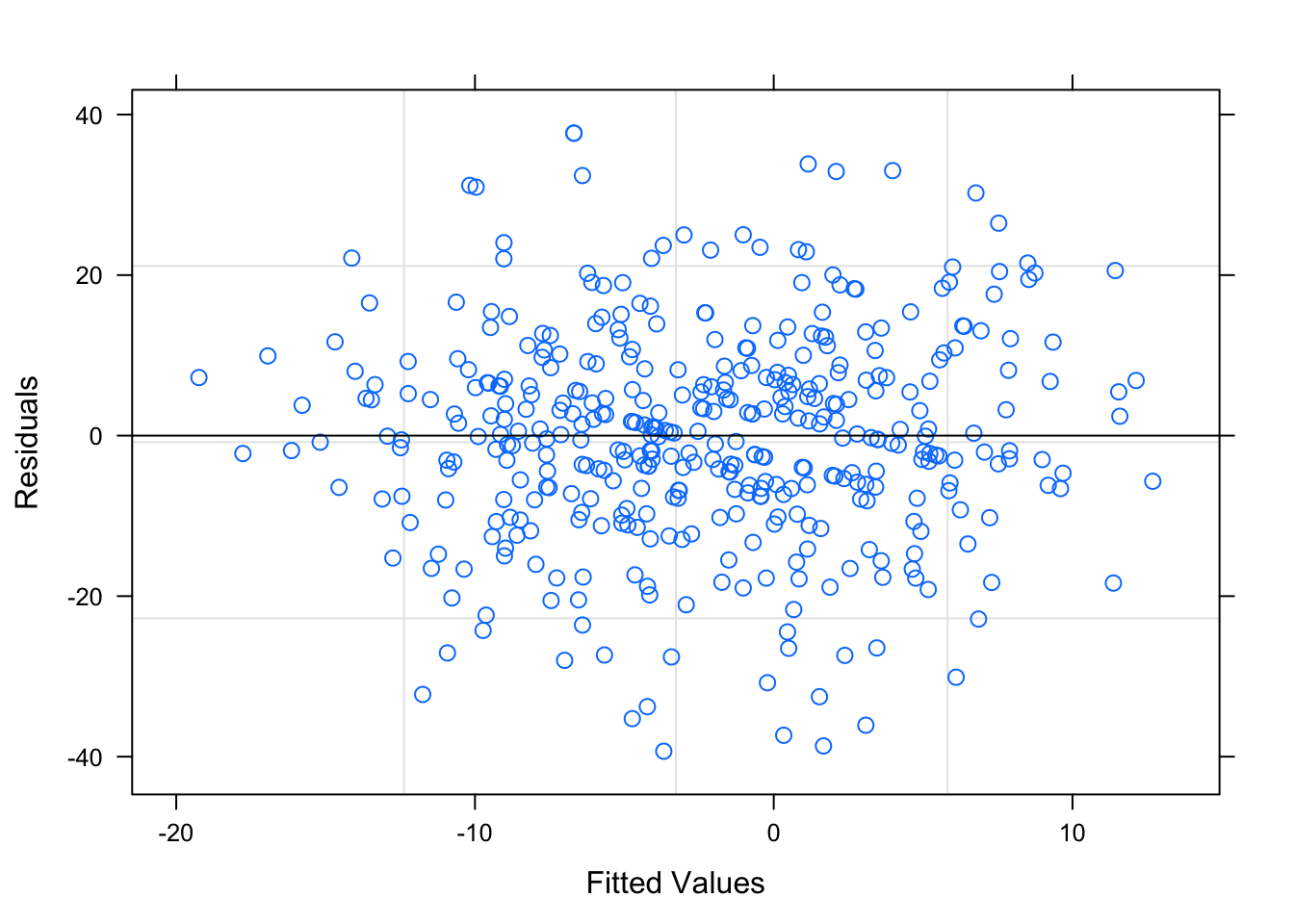

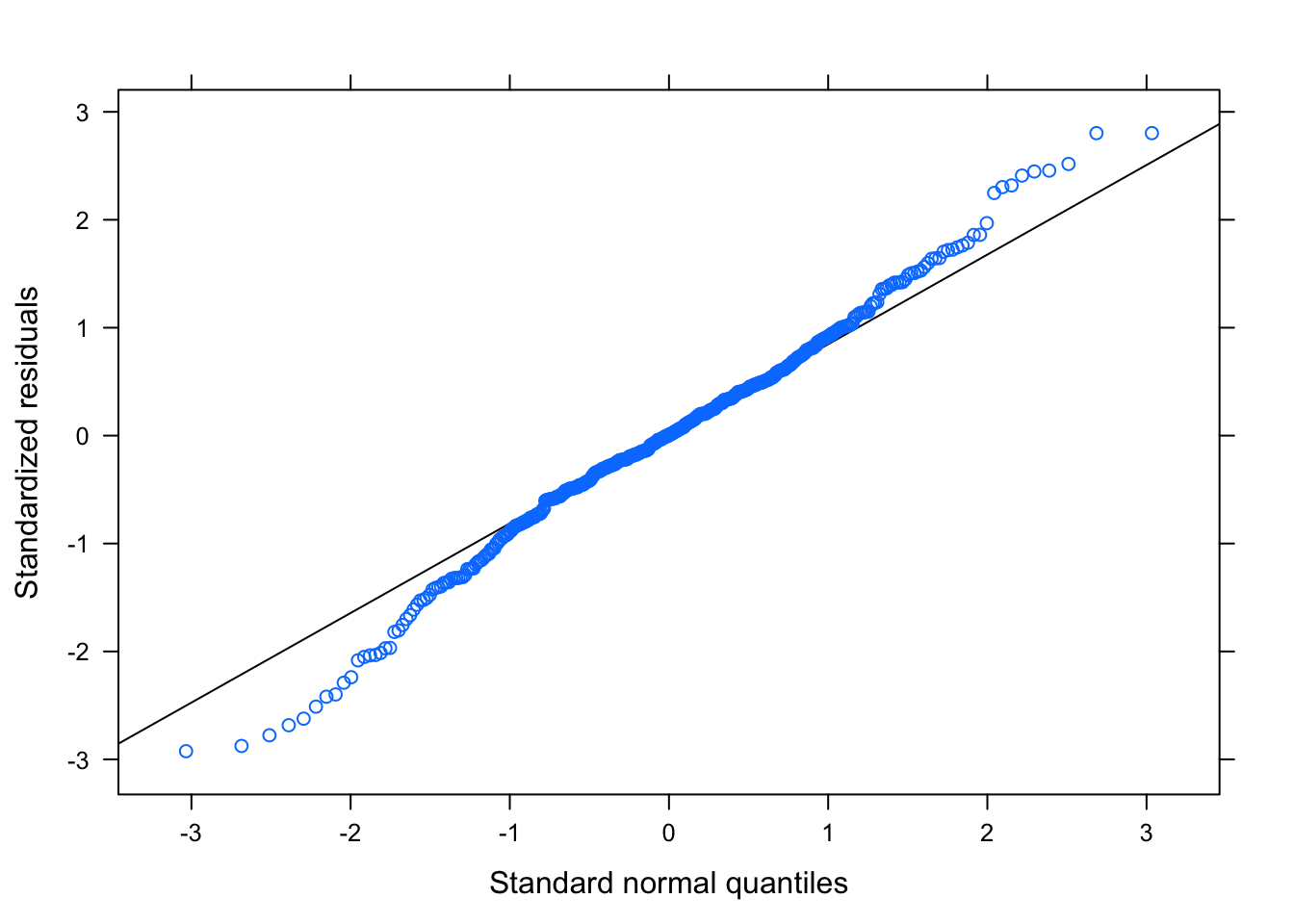

Figure 3.20: Residual Plots for Betting-Trends Model

Table ?? displays the parameters for the Betting-Trend model. This model also seems to pass all the diagnostic tests, shown in Figure 3.20, as the residuals tend to be random and non-correlated. The residual plots based on the groups are shown in Section ?? of the Appendix, but there are no egregious errors.

The mixed-linear models are appropriate for modeling these data, and k-fold validation using 100 test data sets is used to evaluate the models. It is possible that the models have different strengths and weaknesses, in terms of risk and reward, and this can be examined through looking at the distribution of winnings.